Three Easy Steps For Consolidating Oracle Databases into Cloud Virtual Machines

Problem

Having decided to move our Oracle databases to the cloud, we now want to build equivalent VMs as database servers. This is simple enough when we have only one or a very small number of databases, but it can be a complex task when our environment has many Oracle databases. Technologies such as Oracle RAC complicate things further.

Why would we not use the Oracle database as a managed service instead? Short answer: in our case, the cloud vendor of choice does not offer this service, or possibly we are just trying to compare the costs to decide whether managed services are better (they are, but that is another blog post!).

Hence, we'll have questions such as:

- How do we estimate our VM footprint for hosting our Oracle databases in the cloud? (Finding the balance between over-provisioning and consolidation.)

- How many VMs will we need?

- What sizes for vCPU and RAM should we be aiming for?

Basic ideas

A "physical" Oracle database server uses three measurable resources:

- CPU (to run server and background processes)

- Memory - RAM (to cache parts of the database and provide working buffers used by the server and background processes)

- Disk (to store and retrieve the data)

We can measure these as:

- CPU used by active sessions, as Average Active Sessions (AAS)

- RAM allocated (SGA and PGA settings)

- Disk

  - Throughput in megabytes per second (MBPS)

  - IO operations per second (IOPS)

  - Filesystem (or ASM) space usage (GB)

The Cloud VM as a DB Server

Every cloud VM is also allocated CPUs, RAM and disks, but with a few important differences.

- vCPUs are not "full" hyper-threaded CPUs, so we need a conversion factor to convert AAS to vCPUs. After comparing results from www.spec.org, we decided for our purposes (and for our specific cloud provider) that AAS*1.5 = 1 vCPU.

- All access to disk is via a network, and network access is limited (max 2GBPS/vCPU in our case).

- MBPS and IOPS may depend on the type and size of the disk and on the number of vCPUs in the VM.

- We will keep a few vCPUs unused to provide for the OS and tools that may be running on the VM.
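As a rough illustration of the two CPU adjustments above, here is a minimal sketch. It assumes the conversion rule is read as "vCPUs needed = AAS × 1.5", and the reserve of 3 vCPUs is a hypothetical value chosen so that a 96-vCPU VM exposes 93 usable vCPUs; the function names are made up for this example.

```python
import math

# Assumption: the conversion rule is read as vCPUs = AAS * 1.5.
AAS_TO_VCPU = 1.5
# Hypothetical headroom kept free for the OS and tools on each VM.
RESERVED_VCPUS = 3


def vcpus_needed(aas: float) -> int:
    """Convert a database's Average Active Sessions into whole vCPUs."""
    return math.ceil(aas * AAS_TO_VCPU)


def usable_vcpus(vm_vcpus: int, reserved: int = RESERVED_VCPUS) -> int:
    """vCPUs left for databases after setting aside the reserve."""
    return max(vm_vcpus - reserved, 0)


print(vcpus_needed(10.0))  # 15
print(usable_vcpus(96))    # 93
```

Rounding up is a deliberate choice here: under-sizing CPU for a database is usually worse than carrying a fraction of a vCPU of slack.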

The Process

We are going to choose the ideal VM sizes that will host all five of our example databases by answering a few questions. Our tools are a Jupyter notebook running Python 3.7 and a SQLite database holding the collected data.

1. What are we trying to maximize?

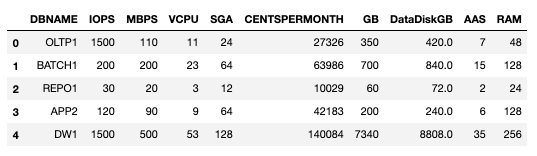

Typically, we want to utilize all the resources that we pay for each month. So each month we want to maximize VMUSAGE, where:

VMUSAGE = (Cents paid for a VM / Cents wasted)

Cents wasted = Cents paid for - Cents actually utilized

This makes sense: we do not want to pay for vCPUs and RAM that we do not use. But it is still a bit ambiguous, because we can get the same VMUSAGE with different sizes of VMs. So how do we get VMUSAGE to be higher for our ideal choice? After a bit of thought, it is clear that we must add a weighting that favors larger VMs: we want to choose the largest VM where we waste the least. Since bigger VMs cost more, let's multiply by the cents we pay for each VM as the weight. So the (new) VMUSAGE factor that we seek to maximize is:

VMUSAGE = (Cents paid for a VM) * (Cents paid for a VM / Cents wasted)

If Cents wasted = 0, then set Cents wasted = 0.0001

Note 1: If we are not wasting anything, VMUSAGE would be infinite (a division by zero), so we put a boundary condition for that in our code. That is the reason for the "if" caveat.

Note 2: It does not have to be this way all the time! We could be solving for a VMUSAGE calculated differently. Let's just work with this one for now; it seems to do the job well enough.

So now we are ready for the first VM type, and we evaluate VMUSAGE after we pick databases for it.

2. Given a VM type, which databases do we choose to put in it?

This is a classical bin packing problem: see https://developers.google.com/optimization/bin/knapsack to understand this, including some sample Python code blocks that show how to solve this problem using the Google OR-Tools collection of libraries and APIs. In our case, however, we will use the multi-dimensional Solver, because our VMs have several capacity constraints. Let us call our Solver (in the OR-Tools Knapsack APIs called by the Python block) "DBAllocate":

    from ortools.algorithms import pywrapknapsack_solver

    solver = pywrapknapsack_solver.KnapsackSolver(
        pywrapknapsack_solver.KnapsackSolver.KNAPSACK_MULTIDIMENSION_CBC_MIP_SOLVER,
        "DBAllocate")

The Solver takes three inputs, all of which are arrays:

- Value: The "intrinsic value" of picking a specific DB. Since we are trying to maximize resource utilization, this is simply the cents used for running the DB in the VM. (Again, this is a simplification. We could calculate this differently; for example, we could score each DB by its "criticality.")

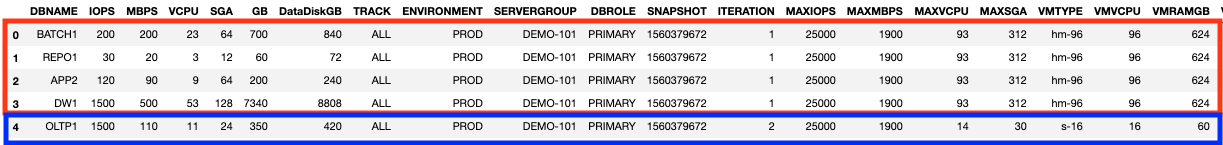

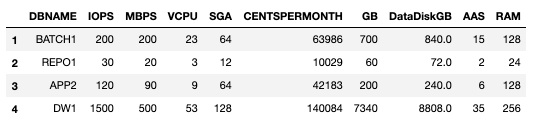

- Observations: These are the resources that each DB consumes from the capacities. We chose IOPS, MBPS, VCPU and SGA as the four resources that each DB uses from the VM. This data is sourced from our AWR mining script outputs. Notice that we did not include the data disk size, which is not affected by the VM type that we select.

- Capacities: The VM has fixed limits for IOPS, MBPS, VCPU and SGA, which we read from our capacities table. The values in the capacities table should have been chosen after allowing a little overhead for growth and measurement inaccuracies, so we are using our data engineering experience here.
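To make the three inputs concrete, here is a small pure-Python stand-in for the selection step. It is not the OR-Tools Solver: it simply tries every subset, which is only practical for a handful of databases, but it takes the same three arrays (values, one row of weights per resource, capacities). All numbers and the function name `pick_databases` are invented for illustration.

```python
from itertools import combinations


def pick_databases(values, weights, capacities):
    """Brute-force multi-dimensional knapsack.

    values[i]     -- value of picking database i
    weights[d][i] -- how much of resource d database i consumes
    capacities[d] -- the VM's limit for resource d
    Returns (best_total_value, indexes_of_picked_databases).
    """
    n = len(values)
    best_value, best_pick = 0, ()
    for r in range(1, n + 1):
        for pick in combinations(range(n), r):
            # A subset fits only if it stays within every capacity.
            fits = all(
                sum(weights[d][i] for i in pick) <= capacities[d]
                for d in range(len(capacities))
            )
            value = sum(values[i] for i in pick)
            if fits and value > best_value:
                best_value, best_pick = value, pick
    return best_value, list(best_pick)


# Made-up example: four databases, four resources.
values = [40, 25, 30, 10]          # cents used by each DB
weights = [
    [3000, 2000, 2500, 500],       # IOPS
    [200, 150, 100, 50],           # MBPS
    [16, 8, 12, 4],                # vCPU
    [64, 32, 48, 16],              # SGA (GB)
]
capacities = [6000, 400, 30, 128]  # limits of one hypothetical VM type

print(pick_databases(values, weights, capacities))  # (75, [0, 1, 3])
```

Note how databases 0 and 2 together would be worth more than 0 and 1, but adding database 3 to them exceeds the vCPU limit, so the best fit is databases 0, 1 and 3.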

3. Rinse/repeat until we allocate all databases

First Iteration

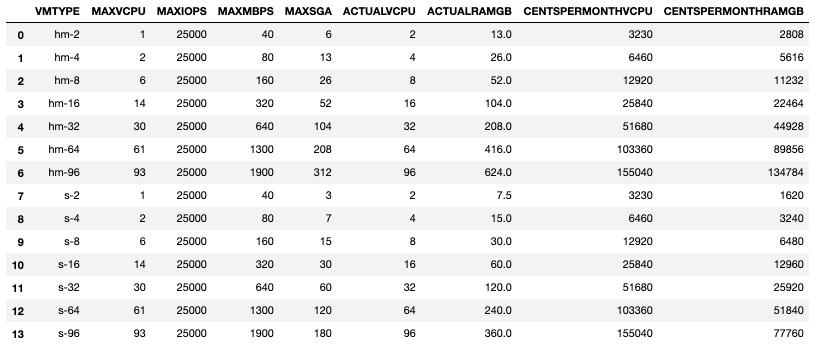

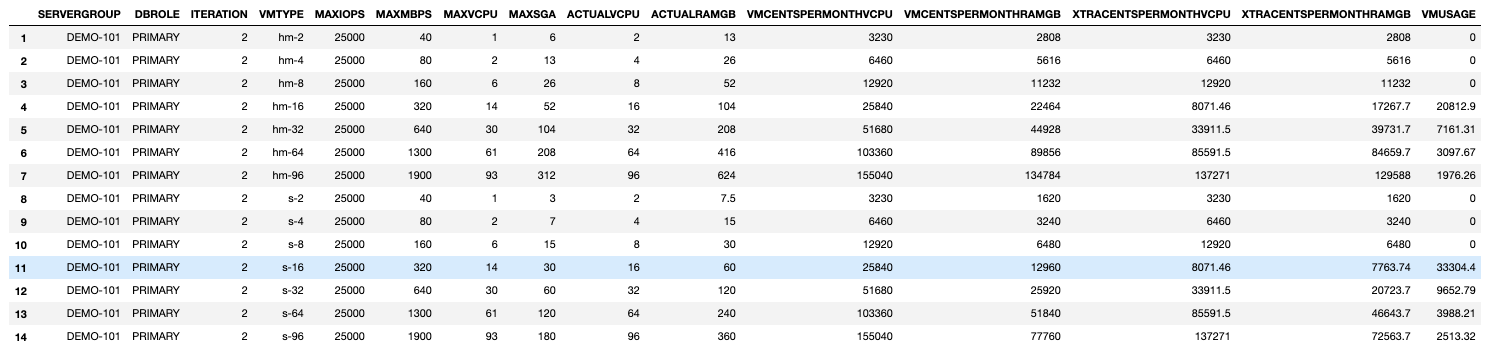

We let the Knapsack Solver pick the databases for each VM type and then calculate VMUSAGE for the database selection for each VM type. The Solver searches the possible combinations to find the optimal configuration. VMUSAGE shows very different values across VM types; higher numbers are better! VMs that cannot accommodate any databases show a VMUSAGE of 0. For Iteration 1, the highest VMUSAGE is for a VM of type hm-96 (Row 7).
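The scoring used to rank VM types can be sketched as below, following the weighted VMUSAGE formula from step 1. The VM names and cent values are invented, and returning 0 for a VM that holds no databases is an assumption made to match the behavior described above (the bare formula would return the price of the VM instead).

```python
def vmusage(cents_paid: float, cents_used: float) -> float:
    """Weighted VMUSAGE: cents paid * (cents paid / cents wasted)."""
    if cents_used == 0:
        # Assumption: a VM that fits no databases scores 0.
        return 0.0
    cents_wasted = cents_paid - cents_used
    if cents_wasted <= 0:
        # Boundary condition from the text: avoid dividing by zero.
        cents_wasted = 0.0001
    return cents_paid * (cents_paid / cents_wasted)


# Hypothetical VM types: name -> (cents paid, cents used by the chosen DBs).
candidates = {
    "small": (100, 0),     # nothing fits
    "medium": (200, 150),  # wastes a quarter of what we pay
    "large": (400, 300),   # also wastes a quarter, but is the bigger VM
}

scores = {name: vmusage(paid, used) for name, (paid, used) in candidates.items()}
best = max(scores, key=scores.get)
print(scores)  # {'small': 0.0, 'medium': 800.0, 'large': 1600.0}
print(best)    # large
```

The "medium" and "large" rows waste the same fraction of their cost, so the unweighted ratio would tie them; multiplying by the cents paid is what breaks the tie in favor of the larger VM.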

The Solver determines that the VM hm-96 will contain the following databases (it has used 88 of the available 93 vCPUs).

Second Iteration

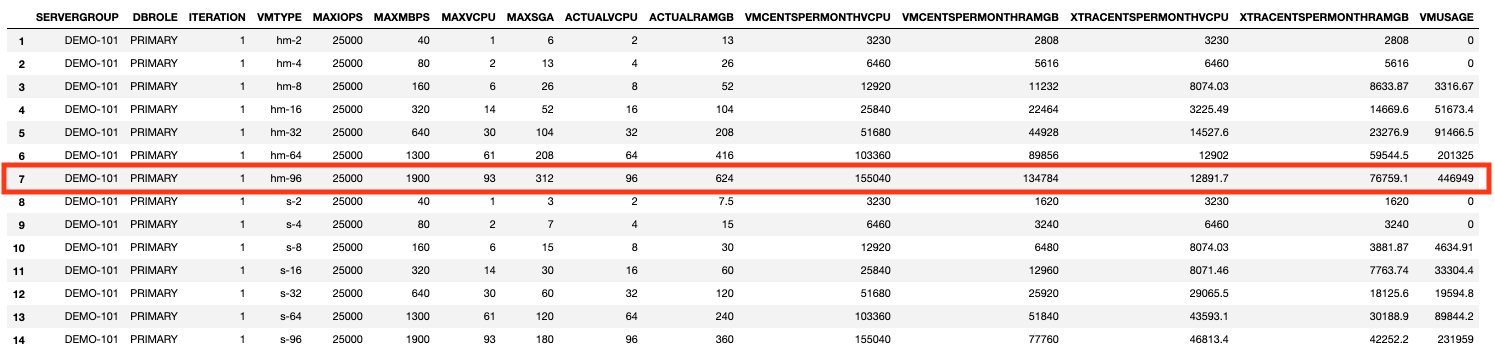

After we remove the databases already selected in the first iteration, we are left with only one database to allocate! The code runs the second iteration automatically as long as there are databases yet to be allocated, re-running the VMUSAGE calculation to find the best VM to hold the last remaining database.
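The rinse-and-repeat loop could look roughly like the following sketch. It is a simplified, self-contained stand-in, not the notebook code: it uses a brute-force subset search instead of the OR-Tools Solver, only two resources, and invented databases, VM types and prices.

```python
from itertools import combinations


def score(paid, used):
    """Weighted VMUSAGE; 0 when nothing is placed (assumed convention)."""
    if used == 0:
        return 0.0
    wasted = max(paid - used, 0.0001)
    return paid * paid / wasted


def best_subset(dbs, caps):
    """Highest-value set of databases that fits within every capacity."""
    best = (0, ())
    for r in range(1, len(dbs) + 1):
        for pick in combinations(list(dbs), r):
            if all(sum(dbs[n][k] for n in pick) <= caps[k] for k in caps):
                value = sum(dbs[n]["cents"] for n in pick)
                if value > best[0]:
                    best = (value, pick)
    return best


def allocate(dbs, vm_types):
    """Repeat: score every VM type, keep the best, drop the placed DBs."""
    remaining = dict(dbs)
    plan = []
    while remaining:
        scored = []
        for vm_name, vm in vm_types.items():
            used, pick = best_subset(remaining, vm["caps"])
            scored.append((score(vm["cents"], used), vm_name, pick))
        _, vm_name, pick = max(scored)
        if not pick:
            raise ValueError("no VM type fits the remaining databases")
        plan.append((vm_name, sorted(pick)))
        for name in pick:
            del remaining[name]
    return plan


# Invented sample data.
dbs = {
    "db_a": {"vcpu": 8, "sga": 32, "cents": 80},
    "db_b": {"vcpu": 4, "sga": 16, "cents": 40},
    "db_c": {"vcpu": 2, "sga": 8, "cents": 20},
}
vm_types = {
    "big": {"cents": 130, "caps": {"vcpu": 12, "sga": 48}},
    "small": {"cents": 21, "caps": {"vcpu": 2, "sga": 8}},
}

print(allocate(dbs, vm_types))
# [('big', ['db_a', 'db_b']), ('small', ['db_c'])]
```

In this toy run the first pass fills the big VM with two databases, and the second pass finds that the small VM wastes far less on the one database left over.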

The Solution

In our example, we can do a dense packing of our five databases in two kinds of VMs to minimize expected costs. The VM type hm-96 hosts the first four databases (BATCH1, REPO1, APP2, DW1) and the VM type s-16 hosts one database (OLTP1).