Container orchestrators and persistent volumes - part 1: DC/OS

One key factor in running stateful services (e.g., database servers) in container-centric, managed environments is portability: the dataset needs to follow your container instance. I spent some time reviewing how external persistent volumes are handled when working with DC/OS on AWS.

DC/OS on AWS in 15 minutes

I started by deploying the latest version of DC/OS on AWS using the CloudFormation template provided by Mesosphere. Master HA is neither relevant nor required for a lab, so I went with the single-master deployment.

Once your DC/OS CloudFormation deployment is complete, you will find the URL of the web console in the stack's Outputs section, under DnsAddress. You can log into the console with Google, GitHub or Microsoft SSO credentials.
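If you would rather not dig through the CloudFormation console, the AWS CLI can wait for the stack and print that output. This is a minimal sketch, assuming the AWS CLI is installed and configured for the region you deployed to; the function name and the example stack name are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait for the DC/OS CloudFormation stack to finish
# and print its DnsAddress output (the web console address).
# Assumes the AWS CLI is installed and configured for the right region.
dcos_console_address() {
  local stack_name="$1"

  # Blocks until the stack reaches CREATE_COMPLETE; fails if it rolls back.
  aws cloudformation wait stack-create-complete \
    --stack-name "$stack_name" || return 1

  # JMESPath query that keeps only the DnsAddress output value.
  aws cloudformation describe-stacks \
    --stack-name "$stack_name" \
    --query "Stacks[0].Outputs[?OutputKey=='DnsAddress'].OutputValue" \
    --output text
}

# Usage (example stack name):
#   dcos_console_address my-dcos-lab
```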

Experimenting with external persistent volumes

Let’s deploy a simple MySQL instance from the DC/OS catalog (DC/OS GUI -> Catalog -> Search -> MySQL).

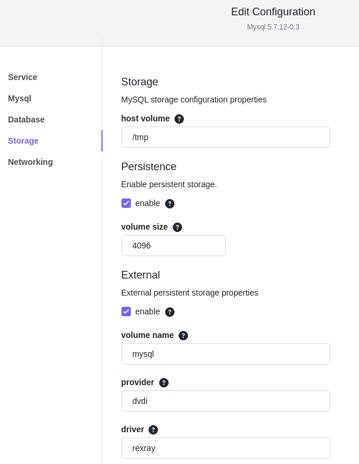

In the service configuration, we will make the data volume “persistent” and “external”.

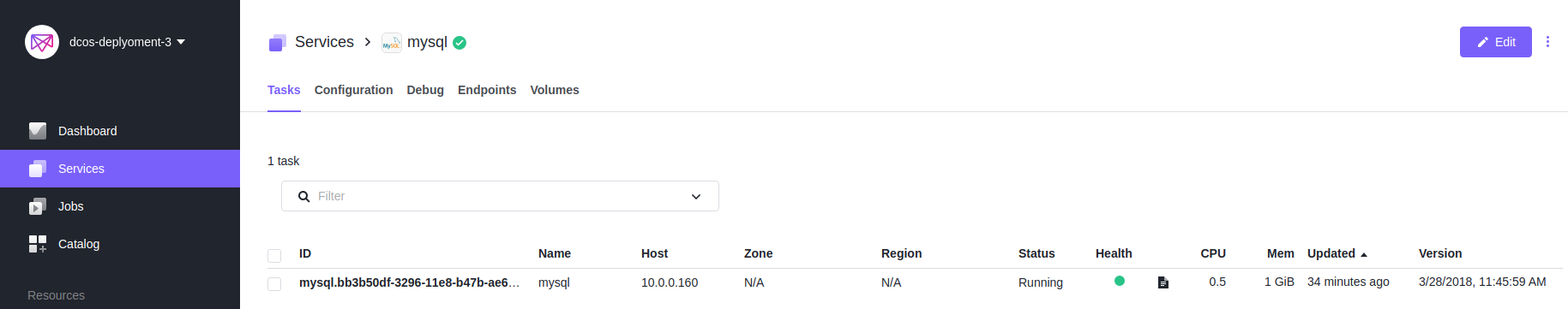

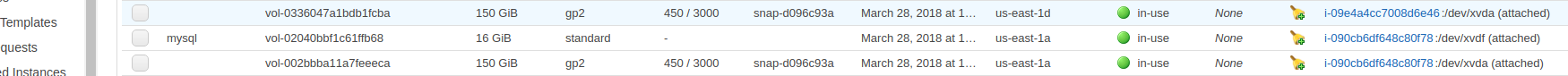
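The catalog form builds the service definition for you. For reference, the sketch below shows roughly what a hand-written Marathon app with an external volume could look like; it is not the definition the MySQL package generates. The app id, image tag, resources and password variable are assumptions, while the `external` block follows Marathon's external-volume format (`dvdi` provider with the `rexray` driver).

```bash
#!/usr/bin/env bash
# Hypothetical Marathon app for MySQL backed by an external REX-Ray/EBS
# volume. NOT the catalog package's definition; id, image, resources and
# password handling are assumptions for illustration.
# upgradeStrategy 0/0: never run a second task alongside the old one,
# since only one task can hold the EBS volume at a time.
write_mysql_app() {
  local volume_name="$1" out_file="$2"
  cat > "$out_file" <<EOF
{
  "id": "/mysql-external",
  "instances": 1,
  "cpus": 1,
  "mem": 1024,
  "env": { "MYSQL_ROOT_PASSWORD": "${MYSQL_ROOT_PASSWORD:-changeme}" },
  "container": {
    "type": "DOCKER",
    "docker": { "image": "mysql:5.7", "network": "HOST" },
    "volumes": [
      {
        "containerPath": "/var/lib/mysql",
        "mode": "RW",
        "external": {
          "name": "${volume_name}",
          "provider": "dvdi",
          "options": { "dvdi/driver": "rexray" }
        }
      }
    ]
  },
  "upgradeStrategy": { "minimumHealthCapacity": 0, "maximumOverCapacity": 0 }
}
EOF
}

# Usage: generate the file, then submit it with the DC/OS CLI.
#   write_mysql_app mysql mysql-app.json
#   dcos marathon app add mysql-app.json
```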

After launching the service, you will notice that an EBS volume was created with the name specified in the “volume name” field. That volume is also mounted on the slave node where the MySQL service is running (to see the node's IP, go to Services -> mysql).

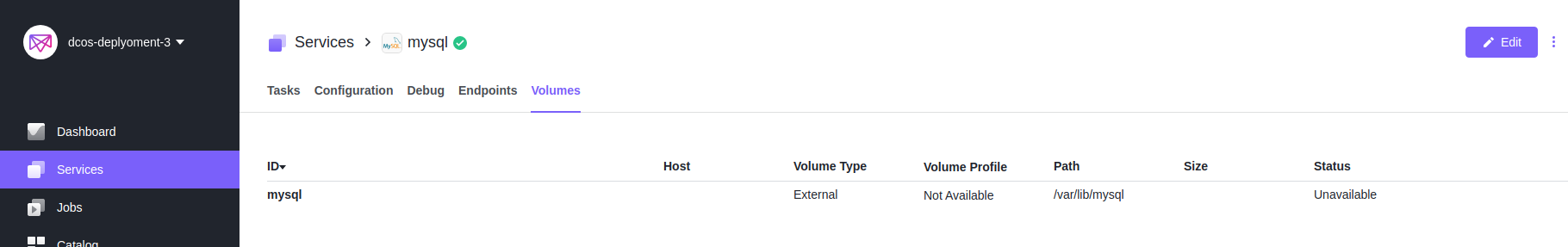
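The same check can be done from the command line. A minimal sketch, assuming the AWS CLI is configured and that the volume's `Name` tag matches the volume name entered in the form; `show_ebs_volume` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the EBS volume created for a DC/OS external
# volume, with its state and the EC2 instance it is attached to.
# Assumes the EBS Name tag equals the "volume name" from the service form.
show_ebs_volume() {
  local volume_name="$1"
  aws ec2 describe-volumes \
    --filters "Name=tag:Name,Values=${volume_name}" \
    --query 'Volumes[].[VolumeId,State,Attachments[0].InstanceId]' \
    --output text
}

# Usage:
#   show_ebs_volume mysql
# An attached volume shows its ID, the state "in-use" and the instance ID.
```

Running it again after a failover is a quick way to see which instance currently holds the volume.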

You can also see the volumes associated with a service in its Volumes tab.

So at this point we have a MySQL instance running in a container on node 10.0.0.160. Since we requested a persistent, external volume and we are on AWS, DC/OS took care of it: it provisioned an EBS volume and made the necessary arrangements for the data directory to be stored there. Pretty neat, isn’t it?

Now let’s see how DC/OS deals with node failures by forcing one on the node where the MySQL instance is running (10.0.0.160 in this case). To make things more interesting, I first created a table and inserted some data.
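Something along these lines creates the test data from the command line. A minimal sketch: it assumes the `mysql` client is installed, that MySQL is reachable on the agent IP (port 3306 by default here) and that the root password is in `MYSQL_ROOT_PASSWORD`; the `failover_test` database, the table `t` and the helper name are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create a small table and insert a few rows so we
# can check later that the data survived the node failure.
# Names (failover_test, t) are made up; the port default is an assumption.
seed_failover_data() {
  local host="$1" port="${2:-3306}"
  # Passing the password on the command line is acceptable for a lab only.
  mysql -h "$host" -P "$port" -u root \
    -p"${MYSQL_ROOT_PASSWORD:?set MYSQL_ROOT_PASSWORD first}" \
    -e "CREATE DATABASE IF NOT EXISTS failover_test;
        CREATE TABLE IF NOT EXISTS failover_test.t (
          id INT PRIMARY KEY,
          note VARCHAR(64)
        );
        INSERT IGNORE INTO failover_test.t VALUES
          (1, 'before'), (2, 'the'), (3, 'failure');"
}

# Usage:
#   MYSQL_ROOT_PASSWORD=... seed_failover_data 10.0.0.160
```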

Something worth mentioning here is that the CloudFormation template makes slave nodes part of Auto Scaling groups, so if you stop or terminate the instance, a new instance will be provisioned in its place. Furthermore, if the instance is restarted, the service will be migrated, but it may die if the failed server comes back to life, as DC/OS will then be unable to detach the EBS volume from it.

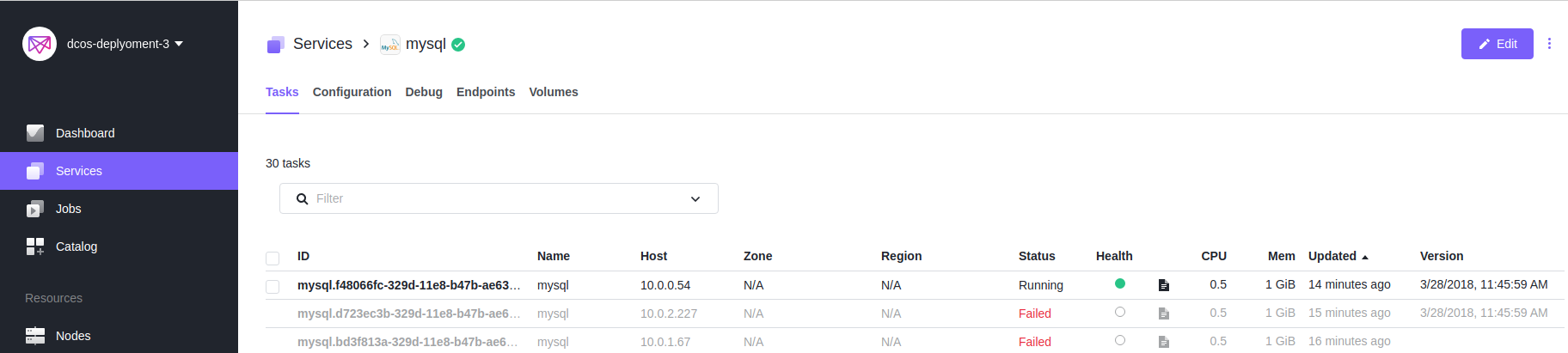

I was able to get the MySQL service migrated by restarting the node, but, as shown in the screenshot, I ran into the issue mentioned above: the service failed and kept retrying until I shut down the 10.0.0.160 instance so that the volume could be detached.
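Both the restart and the shutdown can also be triggered with the AWS CLI instead of the EC2 console. A minimal sketch, assuming the AWS CLI is configured and that the agent can be identified by its private IP; the helper names are hypothetical. Keep in mind that stopping an instance in an Auto Scaling group may cause it to be replaced.

```bash
#!/usr/bin/env bash
# Hypothetical helpers to reproduce the failure test from the CLI.
# Assumes the AWS CLI is configured; the IP is the agent running MySQL.

# Look up the EC2 instance ID that owns a given private IP address.
instance_for_ip() {
  aws ec2 describe-instances \
    --filters "Name=private-ip-address,Values=$1" \
    --query 'Reservations[].Instances[].InstanceId' \
    --output text
}

# reboot: restart the agent (the EBS volume stays attached to it).
# stop:   shut the agent down so the volume is no longer in use and can
#         be detached for the rescheduled task.
agent_power() {
  local action="$1" ip="$2" id
  id=$(instance_for_ip "$ip") || return 1
  [ -n "$id" ] || { echo "no instance with IP $ip" >&2; return 1; }
  case "$action" in
    reboot) aws ec2 reboot-instances --instance-ids "$id" ;;
    stop)   aws ec2 stop-instances --instance-ids "$id" ;;
    *)      echo "usage: agent_power reboot|stop IP" >&2; return 2 ;;
  esac
}

# Usage:
#   agent_power reboot 10.0.0.160
#   agent_power stop 10.0.0.160
```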

Once DC/OS detects that the node is unreachable, it starts migrating the service to an online node. After the service is marked as Healthy (green) again, we can check the IP of the slave node where it is now running. If we check the EBS volume, it now appears as attached to the new slave node, and the data is accessible again.
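To confirm that the data survived, you can count the rows on the node where the service now runs. A minimal sketch, assuming the `mysql` client, `MYSQL_ROOT_PASSWORD` set, and a hypothetical test table `failover_test.t` holding the rows inserted before the failure; substitute your own table and the new agent's IP.

```bash
#!/usr/bin/env bash
# Hypothetical check: compare the row count of a test table on the new
# agent with the number of rows inserted before the failure.
# Table name and port default are assumptions.
verify_failover_data() {
  local host="$1" expected="$2" port="${3:-3306}" count
  # -N drops the column header, -B prints plain batch output.
  count=$(mysql -h "$host" -P "$port" -u root \
    -p"${MYSQL_ROOT_PASSWORD:?set MYSQL_ROOT_PASSWORD first}" \
    -N -B -e 'SELECT COUNT(*) FROM failover_test.t') || return 1
  if [ "$count" = "$expected" ]; then
    echo "OK: $count rows found on $host"
  else
    echo "MISMATCH: expected $expected rows, found $count" >&2
    return 1
  fi
}

# Usage:
#   verify_failover_data NEW_AGENT_IP 3
```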

REXRay

REXRay is the technology in charge of provisioning EBS volumes, attaching and mounting them on EC2 instances (and unmounting and detaching them), and making the mount point available to the container. You will find rexray running as a daemon on the DC/OS slave nodes, and containers running with the --volume-driver=rexray parameter. The REXRay configuration file specifies “ebs” as the service, and the CloudFormation template assigns the necessary IAM roles to the compute instances so they can interact with the EBS APIs. Running docker inspect on the MySQL container reveals the EBS mount point, the associated container path and the driver in use:

[code language="bash"]
ip-10-0-0-54 ~ # docker inspect 182c97e9d4e4 | jq '.[].Mounts'
[
  {
    "Name": "mysql",
    "Source": "/var/lib/libstorage/volumes/mysql/data",
    "Destination": "/var/lib/mysql",
    "Driver": "rexray",
    "Mode": "rw",
    "RW": true,
    "Propagation": "rprivate"
  },
[/code]

Conclusions

We showed that DC/OS automatically and transparently takes care of moving external persistent volumes (EBS in this case) to the slave nodes where the associated service tasks are running. It is important to highlight, however, that in this test the EBS volume could not be detached while the original instance was still running, so the failed node had to be shut down before the service could recover.

Finally, even though you could deploy a standalone database instance as described here, a Galera cluster may be a better choice for running MySQL on DC/OS (as explained in this Percona Live 2018 session).