How to deploy a Cockroach DB Cluster in GCP? Part II

In the first part of this blog, we determined the requirements for our cluster and planned its details. Then we created the VPC structure and the CDB node template we will use to build all the nodes. For an idea of the solution we want to implement, please take a look at this high-level diagram. After creating the remaining nodes, we will create an Instance Group (IG) for each node and add those IGs to the backend of the Load Balancer. This way, we can load balance across the 9 nodes of the cluster.

Create Cluster

Let’s go ahead and create the remaining nodes we need to complete our 9-node cluster.

The following commands can be executed from the GCP Cloud Shell. They use the Machine Image created in the first part of the blog and create one node in each of the subnets across the different regions. (Replace the --metadata parameter with your own values.)

gcloud beta compute instances create cdb-cluster-node2 --project=cdbblog --zone=us-central1-b --machine-type=n2d-standard-4 --network-interface=subnet=private-us-central1-b,no-address --metadata****** --maintenance-policy=MIGRATE --provisioning-model=STANDARD --service-account=458159664907-compute@developer.gserviceaccount.com --scopes=https://www.googleapis.com/auth/devstorage.read_only,https://www.googleapis.com/auth/logging.write,https://www.googleapis.com/auth/monitoring.write,https://www.googleapis.com/auth/servicecontrol,https://www.googleapis.com/auth/service.management.readonly,https://www.googleapis.com/auth/trace.append --min-cpu-platform=Automatic --tags=cdb,private --no-shielded-secure-boot --shielded-vtpm --shielded-integrity-monitoring --reservation-affinity=any --source-machine-image=cdb-cluster-node-image

gcloud beta compute instances create cdb-cluster-node3 --project=cdbblog --zone=us-central1-c --machine-type=n2d-standard-4 --network-interface=subnet=private-us-central1-c,no-address --metadata****** --maintenance-policy=MIGRATE --provisioning-model=STANDARD --service-account=458159664907-compute@developer.gserviceaccount.com --scopes=https://www.googleapis.com/auth/devstorage.read_only,https://www.googleapis.com/auth/logging.write,https://www.googleapis.com/auth/monitoring.write,https://www.googleapis.com/auth/servicecontrol,https://www.googleapis.com/auth/service.management.readonly,https://www.googleapis.com/auth/trace.append --min-cpu-platform=Automatic --tags=cdb,private --no-shielded-secure-boot --shielded-vtpm --shielded-integrity-monitoring --reservation-affinity=any --source-machine-image=cdb-cluster-node-image

gcloud beta compute instances create cdb-cluster-node4 --project=cdbblog --zone=europe-southwest1-a --machine-type=n2d-standard-4 --network-interface=subnet=private-europe-southwest1-a,no-address --metadata****** --maintenance-policy=MIGRATE --provisioning-model=STANDARD --service-account=458159664907-compute@developer.gserviceaccount.com --scopes=https://www.googleapis.com/auth/devstorage.read_only,https://www.googleapis.com/auth/logging.write,https://www.googleapis.com/auth/monitoring.write,https://www.googleapis.com/auth/servicecontrol,https://www.googleapis.com/auth/service.management.readonly,https://www.googleapis.com/auth/trace.append --min-cpu-platform=Automatic --tags=cdb,private --no-shielded-secure-boot --shielded-vtpm --shielded-integrity-monitoring --reservation-affinity=any --source-machine-image=cdb-cluster-node-image

gcloud beta compute instances create cdb-cluster-node5 --project=cdbblog --zone=europe-southwest1-b --machine-type=n2d-standard-4 --network-interface=subnet=private-europe-southwest1-b,no-address --metadata****** --maintenance-policy=MIGRATE --provisioning-model=STANDARD --service-account=458159664907-compute@developer.gserviceaccount.com --scopes=https://www.googleapis.com/auth/devstorage.read_only,https://www.googleapis.com/auth/logging.write,https://www.googleapis.com/auth/monitoring.write,https://www.googleapis.com/auth/servicecontrol,https://www.googleapis.com/auth/service.management.readonly,https://www.googleapis.com/auth/trace.append --min-cpu-platform=Automatic --tags=cdb,private --no-shielded-secure-boot --shielded-vtpm --shielded-integrity-monitoring --reservation-affinity=any --source-machine-image=cdb-cluster-node-image

gcloud beta compute instances create cdb-cluster-node6 --project=cdbblog --zone=europe-southwest1-c --machine-type=n2d-standard-4 --network-interface=subnet=private-europe-southwest1-c,no-address --metadata****** --maintenance-policy=MIGRATE --provisioning-model=STANDARD --service-account=458159664907-compute@developer.gserviceaccount.com --scopes=https://www.googleapis.com/auth/devstorage.read_only,https://www.googleapis.com/auth/logging.write,https://www.googleapis.com/auth/monitoring.write,https://www.googleapis.com/auth/servicecontrol,https://www.googleapis.com/auth/service.management.readonly,https://www.googleapis.com/auth/trace.append --min-cpu-platform=Automatic --tags=cdb,private --no-shielded-secure-boot --shielded-vtpm --shielded-integrity-monitoring --reservation-affinity=any --source-machine-image=cdb-cluster-node-image

gcloud beta compute instances create cdb-cluster-node7 --project=cdbblog --zone=southamerica-east1-a --machine-type=n2d-standard-4 --network-interface=subnet=private-southamerica-east1-a,no-address --metadata****** --maintenance-policy=MIGRATE --provisioning-model=STANDARD --service-account=458159664907-compute@developer.gserviceaccount.com --scopes=https://www.googleapis.com/auth/devstorage.read_only,https://www.googleapis.com/auth/logging.write,https://www.googleapis.com/auth/monitoring.write,https://www.googleapis.com/auth/servicecontrol,https://www.googleapis.com/auth/service.management.readonly,https://www.googleapis.com/auth/trace.append --min-cpu-platform=Automatic --tags=cdb,private --no-shielded-secure-boot --shielded-vtpm --shielded-integrity-monitoring --reservation-affinity=any --source-machine-image=cdb-cluster-node-image

gcloud beta compute instances create cdb-cluster-node8 --project=cdbblog --zone=southamerica-east1-b --machine-type=n2d-standard-4 --network-interface=subnet=private-southamerica-east1-b,no-address --metadata****** --maintenance-policy=MIGRATE --provisioning-model=STANDARD --service-account=458159664907-compute@developer.gserviceaccount.com --scopes=https://www.googleapis.com/auth/devstorage.read_only,https://www.googleapis.com/auth/logging.write,https://www.googleapis.com/auth/monitoring.write,https://www.googleapis.com/auth/servicecontrol,https://www.googleapis.com/auth/service.management.readonly,https://www.googleapis.com/auth/trace.append --min-cpu-platform=Automatic --tags=cdb,private --no-shielded-secure-boot --shielded-vtpm --shielded-integrity-monitoring --reservation-affinity=any --source-machine-image=cdb-cluster-node-image

gcloud beta compute instances create cdb-cluster-node9 --project=cdbblog --zone=southamerica-east1-c --machine-type=n2d-standard-4 --network-interface=subnet=private-southamerica-east1-c,no-address --metadata****** --maintenance-policy=MIGRATE --provisioning-model=STANDARD --service-account=458159664907-compute@developer.gserviceaccount.com --scopes=https://www.googleapis.com/auth/devstorage.read_only,https://www.googleapis.com/auth/logging.write,https://www.googleapis.com/auth/monitoring.write,https://www.googleapis.com/auth/servicecontrol,https://www.googleapis.com/auth/service.management.readonly,https://www.googleapis.com/auth/trace.append --min-cpu-platform=Automatic --tags=cdb,private --no-shielded-secure-boot --shielded-vtpm --shielded-integrity-monitoring --reservation-affinity=any --source-machine-image=cdb-cluster-node-image
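Since the node creation commands differ only in the node number and the zone, they can also be generated in a loop. The following is a minimal dry-run sketch and not the method used in this blog: it only prints the commands so they can be reviewed first. It assumes the node-to-zone order used in this post, and it leaves out the --metadata, --service-account, --scopes and shielded-VM flags for brevity, so append them before running anything. The helper name print_create_commands is made up for this example.

```shell
#!/bin/sh
# Zones for cdb-cluster-node2 .. cdb-cluster-node9, in the order used in this post.
ZONES="us-central1-b us-central1-c
europe-southwest1-a europe-southwest1-b europe-southwest1-c
southamerica-east1-a southamerica-east1-b southamerica-east1-c"

# Hypothetical helper: prints (does not run) one create command per node.
# Flags not listed here (metadata, service account, scopes, ...) are omitted.
print_create_commands() {
  n=2
  for zone in $ZONES; do
    echo "gcloud beta compute instances create cdb-cluster-node${n}" \
      "--project=cdbblog --zone=${zone} --machine-type=n2d-standard-4" \
      "--network-interface=subnet=private-${zone},no-address" \
      "--tags=cdb,private --source-machine-image=cdb-cluster-node-image"
    n=$((n + 1))
  done
}

print_create_commands
```

Printing first makes it easy to spot a wrong zone or subnet name before any instance is created.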

To balance the load across the cluster, GCP offers a fully managed TCP Proxy Load Balancer. This service lets you use a single IP address for all users around the world and automatically routes traffic to the instances closest to the user. We need a distinct instance group for each availability zone (AZ) that runs a node. Let’s go ahead and create all the instance groups. Each one needs a named port that points to 26257, the CDB default port.

gcloud compute instance-groups unmanaged create ig-cdb-cluster-us-central1-a --project=cdbblog --zone=us-central1-a
gcloud compute instance-groups unmanaged set-named-ports ig-cdb-cluster-us-central1-a --project=cdbblog --zone=us-central1-a --named-ports=cdb-port:26257
gcloud compute instance-groups unmanaged add-instances ig-cdb-cluster-us-central1-a --project=cdbblog --zone=us-central1-a --instances=cdb-cluster-node1

gcloud compute instance-groups unmanaged create ig-cdb-cluster-us-central1-b --project=cdbblog --zone=us-central1-b
gcloud compute instance-groups unmanaged set-named-ports ig-cdb-cluster-us-central1-b --project=cdbblog --zone=us-central1-b --named-ports=cdb-port:26257
gcloud compute instance-groups unmanaged add-instances ig-cdb-cluster-us-central1-b --project=cdbblog --zone=us-central1-b --instances=cdb-cluster-node2

gcloud compute instance-groups unmanaged create ig-cdb-cluster-us-central1-c --project=cdbblog --zone=us-central1-c
gcloud compute instance-groups unmanaged set-named-ports ig-cdb-cluster-us-central1-c --project=cdbblog --zone=us-central1-c --named-ports=cdb-port:26257
gcloud compute instance-groups unmanaged add-instances ig-cdb-cluster-us-central1-c --project=cdbblog --zone=us-central1-c --instances=cdb-cluster-node3

gcloud compute instance-groups unmanaged create ig-cdb-cluster-europe-southwest1-a --project=cdbblog --zone=europe-southwest1-a
gcloud compute instance-groups unmanaged set-named-ports ig-cdb-cluster-europe-southwest1-a --project=cdbblog --zone=europe-southwest1-a --named-ports=cdb-port:26257
gcloud compute instance-groups unmanaged add-instances ig-cdb-cluster-europe-southwest1-a --project=cdbblog --zone=europe-southwest1-a --instances=cdb-cluster-node4

gcloud compute instance-groups unmanaged create ig-cdb-cluster-europe-southwest1-b --project=cdbblog --zone=europe-southwest1-b
gcloud compute instance-groups unmanaged set-named-ports ig-cdb-cluster-europe-southwest1-b --project=cdbblog --zone=europe-southwest1-b --named-ports=cdb-port:26257
gcloud compute instance-groups unmanaged add-instances ig-cdb-cluster-europe-southwest1-b --project=cdbblog --zone=europe-southwest1-b --instances=cdb-cluster-node5

gcloud compute instance-groups unmanaged create ig-cdb-cluster-europe-southwest1-c --project=cdbblog --zone=europe-southwest1-c
gcloud compute instance-groups unmanaged set-named-ports ig-cdb-cluster-europe-southwest1-c --project=cdbblog --zone=europe-southwest1-c --named-ports=cdb-port:26257
gcloud compute instance-groups unmanaged add-instances ig-cdb-cluster-europe-southwest1-c --project=cdbblog --zone=europe-southwest1-c --instances=cdb-cluster-node6

gcloud compute instance-groups unmanaged create ig-cdb-cluster-southamerica-east1-a --project=cdbblog --zone=southamerica-east1-a
gcloud compute instance-groups unmanaged set-named-ports ig-cdb-cluster-southamerica-east1-a --project=cdbblog --zone=southamerica-east1-a --named-ports=cdb-port:26257
gcloud compute instance-groups unmanaged add-instances ig-cdb-cluster-southamerica-east1-a --project=cdbblog --zone=southamerica-east1-a --instances=cdb-cluster-node7

gcloud compute instance-groups unmanaged create ig-cdb-cluster-southamerica-east1-b --project=cdbblog --zone=southamerica-east1-b
gcloud compute instance-groups unmanaged set-named-ports ig-cdb-cluster-southamerica-east1-b --project=cdbblog --zone=southamerica-east1-b --named-ports=cdb-port:26257
gcloud compute instance-groups unmanaged add-instances ig-cdb-cluster-southamerica-east1-b --project=cdbblog --zone=southamerica-east1-b --instances=cdb-cluster-node8

gcloud compute instance-groups unmanaged create ig-cdb-cluster-southamerica-east1-c --project=cdbblog --zone=southamerica-east1-c
gcloud compute instance-groups unmanaged set-named-ports ig-cdb-cluster-southamerica-east1-c --project=cdbblog --zone=southamerica-east1-c --named-ports=cdb-port:26257
gcloud compute instance-groups unmanaged add-instances ig-cdb-cluster-southamerica-east1-c --project=cdbblog --zone=southamerica-east1-c --instances=cdb-cluster-node9
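The three instance-group commands repeat for every zone, so they lend themselves to a loop as well. This is a small dry-run sketch under the assumption that node N lives in the Nth zone of the list, as in this post. The helper name print_ig_commands is hypothetical, and nothing is executed: the commands are only printed.

```shell
#!/bin/sh
# One zone per node, cdb-cluster-node1 .. cdb-cluster-node9, as in this post.
ZONES="us-central1-a us-central1-b us-central1-c
europe-southwest1-a europe-southwest1-b europe-southwest1-c
southamerica-east1-a southamerica-east1-b southamerica-east1-c"

# Hypothetical helper: prints the create / set-named-ports / add-instances
# commands for each zone without running them.
print_ig_commands() {
  n=1
  base="gcloud compute instance-groups unmanaged"
  for zone in $ZONES; do
    ig="ig-cdb-cluster-${zone}"
    opts="--project=cdbblog --zone=${zone}"
    echo "${base} create ${ig} ${opts}"
    echo "${base} set-named-ports ${ig} ${opts} --named-ports=cdb-port:26257"
    echo "${base} add-instances ${ig} ${opts} --instances=cdb-cluster-node${n}"
    n=$((n + 1))
  done
}

print_ig_commands
```

Once the printed commands look right, they can be pasted into the Cloud Shell or piped to sh.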

Create Load Balancer

With all the instance groups created, we can work on a couple of other requirements for the Load Balancer: a firewall rule and a health check.

To determine whether the nodes in each instance group are available, we need to create a health check that verifies port 8080 on the nodes (the Cockroach console runs on port 8080).

gcloud compute health-checks create tcp cdb-cluster-hc --project=cdbblog --port 8080

The firewall rule allows the Load Balancer to reach the cluster nodes and perform the health check:

gcloud compute --project=cdbblog firewall-rules create cdb-cluster-lb-ingress --direction=INGRESS --priority=1000 --network=private-cdb-cluster-prod --action=ALLOW --rules=tcp:26257,tcp:8080 --source-ranges=34.117.211.236,130.211.0.0/22,35.191.0.0/16

Let’s create a backend service for the LB. The --port-name value must match the named port defined on the instance groups (cdb-port):

gcloud compute backend-services create cdb-tcp-lb --project=cdbblog --global-health-checks --global --protocol TCP --health-checks cdb-cluster-hc --timeout 5m --port-name cdb-port

Add all the instance groups to the backend:

gcloud compute backend-services add-backend cdb-tcp-lb --project=cdbblog --global --instance-group ig-cdb-cluster-us-central1-a --instance-group-zone us-central1-a --balancing-mode UTILIZATION --max-utilization 0.8
gcloud compute backend-services add-backend cdb-tcp-lb --project=cdbblog --global --instance-group ig-cdb-cluster-us-central1-b --instance-group-zone us-central1-b --balancing-mode UTILIZATION --max-utilization 0.8
gcloud compute backend-services add-backend cdb-tcp-lb --project=cdbblog --global --instance-group ig-cdb-cluster-us-central1-c --instance-group-zone us-central1-c --balancing-mode UTILIZATION --max-utilization 0.8
gcloud compute backend-services add-backend cdb-tcp-lb --project=cdbblog --global --instance-group ig-cdb-cluster-europe-southwest1-a --instance-group-zone europe-southwest1-a --balancing-mode UTILIZATION --max-utilization 0.8
gcloud compute backend-services add-backend cdb-tcp-lb --project=cdbblog --global --instance-group ig-cdb-cluster-europe-southwest1-b --instance-group-zone europe-southwest1-b --balancing-mode UTILIZATION --max-utilization 0.8
gcloud compute backend-services add-backend cdb-tcp-lb --project=cdbblog --global --instance-group ig-cdb-cluster-europe-southwest1-c --instance-group-zone europe-southwest1-c --balancing-mode UTILIZATION --max-utilization 0.8
gcloud compute backend-services add-backend cdb-tcp-lb --project=cdbblog --global --instance-group ig-cdb-cluster-southamerica-east1-a --instance-group-zone southamerica-east1-a --balancing-mode UTILIZATION --max-utilization 0.8
gcloud compute backend-services add-backend cdb-tcp-lb --project=cdbblog --global --instance-group ig-cdb-cluster-southamerica-east1-b --instance-group-zone southamerica-east1-b --balancing-mode UTILIZATION --max-utilization 0.8
gcloud compute backend-services add-backend cdb-tcp-lb --project=cdbblog --global --instance-group ig-cdb-cluster-southamerica-east1-c --instance-group-zone southamerica-east1-c --balancing-mode UTILIZATION --max-utilization 0.8

Next, configure a target TCP proxy, reserve a static IPv4 address, configure a global forwarding rule, and create a route with the IP reserved for the LB:

Note: The last command is in beta, so it may stop working in the future. If that is the case, execute the step manually from the console.

gcloud compute target-tcp-proxies create cdb-tcp-lb-target-proxy --project=cdbblog --backend-service cdb-tcp-lb --proxy-header NONE
gcloud compute addresses create cdb-tcp-lb-static-ipv4 --project=cdbblog --ip-version=IPV4 --global
gcloud compute forwarding-rules create cdb-tcp-lb-ipv4-forwarding-rule --project=cdbblog --global --target-tcp-proxy cdb-tcp-lb-target-proxy --address cdb-tcp-lb-static-ipv4 --ports 26257
gcloud beta compute routes create cdb-private-to-lb --project=cdbblog --network=private-cdb-cluster-prod --priority=1000 --destination-range=34.117.211.236 --next-hop-gateway=default-internet-gateway
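The address 34.117.211.236 is the one that was reserved in this example, so yours will differ. As a hedged sketch, the reserved address can be looked up by name instead of being typed by hand. The helper names lb_ip and print_route_command are made up for this illustration, and the route command is only printed, not executed.

```shell
#!/bin/sh
# Hypothetical helper: look up the address reserved as cdb-tcp-lb-static-ipv4.
lb_ip() {
  gcloud compute addresses describe cdb-tcp-lb-static-ipv4 \
    --project=cdbblog --global --format='value(address)'
}

# Hypothetical helper: print the route command for a given LB address ($1).
print_route_command() {
  echo "gcloud beta compute routes create cdb-private-to-lb" \
    "--project=cdbblog --network=private-cdb-cluster-prod --priority=1000" \
    "--destination-range=$1 --next-hop-gateway=default-internet-gateway"
}

# Usage (needs an authenticated gcloud, so it is left commented out here):
#   print_route_command "$(lb_ip)"
```

The same lookup is handy later on, when the LB address has to be included in the node certificates.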

The Load Balancer configuration is done. Proceed with the cluster creation.

First, create a certificate for each of the nodes, specifying the private IP of the node and the IP of the LB. This secures the connections to the cluster. Use the bastion host to connect to each node and execute the following command. Please check your assigned IPs; they will differ from the ones in this example:

root@cdb-cluster-node1:/cdb# cockroach cert create-node 10.14.1.4 cdb-cluster-node1 localhost 127.0.0.1 34.117.211.236 --certs-dir=certs --ca-key=my-safe-directory/ca.key
root@cdb-cluster-node2:/cdb# cockroach cert create-node 10.14.2.4 cdb-cluster-node2 localhost 127.0.0.1 34.117.211.236 --certs-dir=certs --ca-key=my-safe-directory/ca.key
root@cdb-cluster-node3:/cdb# cockroach cert create-node 10.14.3.3 cdb-cluster-node3 localhost 127.0.0.1 34.117.211.236 --certs-dir=certs --ca-key=my-safe-directory/ca.key
root@cdb-cluster-node4:/cdb# cockroach cert create-node 10.20.1.3 cdb-cluster-node4 localhost 127.0.0.1 34.117.211.236 --certs-dir=certs --ca-key=my-safe-directory/ca.key
root@cdb-cluster-node5:/cdb# cockroach cert create-node 10.20.2.3 cdb-cluster-node5 localhost 127.0.0.1 34.117.211.236 --certs-dir=certs --ca-key=my-safe-directory/ca.key
root@cdb-cluster-node6:/cdb# cockroach cert create-node 10.20.3.3 cdb-cluster-node6 localhost 127.0.0.1 34.117.211.236 --certs-dir=certs --ca-key=my-safe-directory/ca.key
root@cdb-cluster-node7:/cdb# cockroach cert create-node 10.50.1.3 cdb-cluster-node7 localhost 127.0.0.1 34.117.211.236 --certs-dir=certs --ca-key=my-safe-directory/ca.key
root@cdb-cluster-node8:/cdb# cockroach cert create-node 10.50.2.3 cdb-cluster-node8 localhost 127.0.0.1 34.117.211.236 --certs-dir=certs --ca-key=my-safe-directory/ca.key
root@cdb-cluster-node9:/cdb# cockroach cert create-node 10.50.3.3 cdb-cluster-node9 localhost 127.0.0.1 34.117.211.236 --certs-dir=certs --ca-key=my-safe-directory/ca.key

All the nodes are now secure. Let’s start the cluster. The command to start each node includes the cluster name, the IPs of all the joining nodes, and the locality. It also specifies the location of the data and temporary files. Execute the command on each of the nodes from the mount point /cdb:

root@cdb-cluster-node1:/cdb# cockroach start --certs-dir=certs --advertise-addr=10.14.1.4 --cluster-name="CDBPYTHIAN" --join=10.14.1.4,10.14.2.4,10.14.3.3,10.20.1.3,10.20.2.3,10.20.3.3,10.50.1.3,10.50.2.3,10.50.3.3 --cache=.25 --max-sql-memory=.25 --background --locality=region=us-central1,zone=us-central1-a --store=/cdb_data --temp-dir=/cdb_logs
root@cdb-cluster-node2:/cdb# cockroach start --certs-dir=certs --advertise-addr=10.14.2.4 --cluster-name="CDBPYTHIAN" --join=10.14.1.4,10.14.2.4,10.14.3.3,10.20.1.3,10.20.2.3,10.20.3.3,10.50.1.3,10.50.2.3,10.50.3.3 --cache=.25 --max-sql-memory=.25 --background --locality=region=us-central1,zone=us-central1-b --store=/cdb_data --temp-dir=/cdb_logs
root@cdb-cluster-node3:/cdb# cockroach start --certs-dir=certs --advertise-addr=10.14.3.3 --cluster-name="CDBPYTHIAN" --join=10.14.1.4,10.14.2.4,10.14.3.3,10.20.1.3,10.20.2.3,10.20.3.3,10.50.1.3,10.50.2.3,10.50.3.3 --cache=.25 --max-sql-memory=.25 --background --locality=region=us-central1,zone=us-central1-c --store=/cdb_data --temp-dir=/cdb_logs
root@cdb-cluster-node4:/cdb# cockroach start --certs-dir=certs --advertise-addr=10.20.1.3 --cluster-name="CDBPYTHIAN" --join=10.14.1.4,10.14.2.4,10.14.3.3,10.20.1.3,10.20.2.3,10.20.3.3,10.50.1.3,10.50.2.3,10.50.3.3 --cache=.25 --max-sql-memory=.25 --background --locality=region=europe-southwest1,zone=europe-southwest1-a --store=/cdb_data --temp-dir=/cdb_logs
root@cdb-cluster-node5:/cdb# cockroach start --certs-dir=certs --advertise-addr=10.20.2.3 --cluster-name="CDBPYTHIAN" --join=10.14.1.4,10.14.2.4,10.14.3.3,10.20.1.3,10.20.2.3,10.20.3.3,10.50.1.3,10.50.2.3,10.50.3.3 --cache=.25 --max-sql-memory=.25 --background --locality=region=europe-southwest1,zone=europe-southwest1-b --store=/cdb_data --temp-dir=/cdb_logs
root@cdb-cluster-node6:/cdb# cockroach start --certs-dir=certs --advertise-addr=10.20.3.3 --cluster-name="CDBPYTHIAN" --join=10.14.1.4,10.14.2.4,10.14.3.3,10.20.1.3,10.20.2.3,10.20.3.3,10.50.1.3,10.50.2.3,10.50.3.3 --cache=.25 --max-sql-memory=.25 --background --locality=region=europe-southwest1,zone=europe-southwest1-c --store=/cdb_data --temp-dir=/cdb_logs
root@cdb-cluster-node7:/cdb# cockroach start --certs-dir=certs --advertise-addr=10.50.1.3 --cluster-name="CDBPYTHIAN" --join=10.14.1.4,10.14.2.4,10.14.3.3,10.20.1.3,10.20.2.3,10.20.3.3,10.50.1.3,10.50.2.3,10.50.3.3 --cache=.25 --max-sql-memory=.25 --background --locality=region=southamerica-east1,zone=southamerica-east1-a --store=/cdb_data --temp-dir=/cdb_logs
root@cdb-cluster-node8:/cdb# cockroach start --certs-dir=certs --advertise-addr=10.50.2.3 --cluster-name="CDBPYTHIAN" --join=10.14.1.4,10.14.2.4,10.14.3.3,10.20.1.3,10.20.2.3,10.20.3.3,10.50.1.3,10.50.2.3,10.50.3.3 --cache=.25 --max-sql-memory=.25 --background --locality=region=southamerica-east1,zone=southamerica-east1-b --store=/cdb_data --temp-dir=/cdb_logs
root@cdb-cluster-node9:/cdb# cockroach start --certs-dir=certs --advertise-addr=10.50.3.3 --cluster-name="CDBPYTHIAN" --join=10.14.1.4,10.14.2.4,10.14.3.3,10.20.1.3,10.20.2.3,10.20.3.3,10.50.1.3,10.50.2.3,10.50.3.3 --cache=.25 --max-sql-memory=.25 --background --locality=region=southamerica-east1,zone=southamerica-east1-c --store=/cdb_data --temp-dir=/cdb_logs
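Only two values change from node to node in the start command: the advertised address and the locality. The sketch below shows one way to derive both the --join list and the --locality string, assuming the example IPs from this post and the GCP naming pattern where the region is the zone name without its last suffix. The helper names join_list, locality_for and print_start_command are hypothetical, and the command is printed rather than executed.

```shell
#!/bin/sh
# Private IPs of all nine nodes (example values from this post).
IPS="10.14.1.4 10.14.2.4 10.14.3.3 10.20.1.3 10.20.2.3 10.20.3.3 10.50.1.3 10.50.2.3 10.50.3.3"

# Join the IP list with commas for --join.
join_list() {
  echo "$IPS" | tr ' ' ','
}

# Derive the locality from a zone name ($1): the region is the zone
# without its trailing "-a", "-b" or "-c" suffix.
locality_for() {
  echo "region=${1%-*},zone=$1"
}

# Hypothetical helper: print the start command. $1 = node IP, $2 = zone
print_start_command() {
  echo "cockroach start --certs-dir=certs --advertise-addr=$1" \
    "--cluster-name=\"CDBPYTHIAN\" --join=$(join_list)" \
    "--cache=.25 --max-sql-memory=.25 --background" \
    "--locality=$(locality_for "$2") --store=/cdb_data --temp-dir=/cdb_logs"
}

print_start_command 10.20.1.3 europe-southwest1-a
```

Keeping the IP list in one place avoids a mistyped address in one of the nine --join lists.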

Configure CDB Cluster

Connect to Node 1 to create the client certificate for the root user:

root@cdb-cluster-node1:/cdb# cockroach cert create-client root --certs-dir=certs --ca-key=my-safe-directory/ca.key

From Node 1, where the root certificate is located, initialize the cluster:

root@cdb-cluster-node1:/cdb# cockroach init --certs-dir=certs --host=10.14.1.4 --cluster-name="CDBPYTHIAN"
Cluster successfully initialized

Test the connection to the cluster using the LB IP:

root@cdb-cluster-node1:/cdb# cockroach sql --certs-dir=certs --host=34.117.211.236
#
# Welcome to the CockroachDB SQL shell.
# All statements must be terminated by a semicolon.
# To exit, type: \q.
#
# Server version: CockroachDB CCL v22.1.8 (x86_64-pc-linux-gnu, built 2022/09/29 14:21:51, go1.17.11) (same version as client)
# Cluster ID: 9c34c89a-5e8c-4cc0-9a4e-9dffb72f5350
#
# Enter \? for a brief introduction.
#
root@34.117.211.236:26257/defaultdb> show database;
  database
-------------
  defaultdb
(1 row)

Time: 4ms total (execution 1ms / network 3ms)
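Clients that take a connection string instead of separate flags can reach the cluster through the LB with a PostgreSQL-style URL. The following sketch builds such a URL, assuming the default file names that cockroach cert writes into the certs directory (ca.crt, client.root.crt, client.root.key). The helper name conn_url is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: build a connection URL for the root user.
# $1 = host or LB address, $2 = certs directory
conn_url() {
  echo "postgresql://root@$1:26257/defaultdb?sslmode=verify-full&sslrootcert=$2/ca.crt&sslcert=$2/client.root.crt&sslkey=$2/client.root.key"
}

conn_url 34.117.211.236 certs

# Usage (needs a reachable cluster, so it is left commented out here):
#   cockroach sql --url "$(conn_url 34.117.211.236 certs)"
```

With sslmode=verify-full the client checks that the address it connects to is listed in the node certificate, which is why the LB IP was included when the node certificates were created.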

Check the Cluster Nodes:

root@cdb-cluster-node1:/cdb# cockroach node status --host=10.14.1.4 --certs-dir=certs
  id |     address     |   sql_address   |  build  |         started_at         |         updated_at         |                      locality                       | is_available | is_live
-----+-----------------+-----------------+---------+----------------------------+----------------------------+-----------------------------------------------------+--------------+----------
   1 | 10.14.1.4:26257 | 10.14.1.4:26257 | v22.1.8 | 2022-10-28 02:37:15.882796 | 2022-10-28 02:37:51.907174 | region=us-central1,zone=us-central1-a               | true         | true
   2 | 10.20.1.3:26257 | 10.20.1.3:26257 | v22.1.8 | 2022-10-28 02:37:16.327332 | 2022-10-28 02:37:52.399986 | region=europe-southwest1,zone=europe-southwest1-a   | true         | true
   3 | 10.14.3.3:26257 | 10.14.3.3:26257 | v22.1.8 | 2022-10-28 02:37:16.425969 | 2022-10-28 02:37:52.449046 | region=us-central1,zone=us-central1-c               | true         | true
   4 | 10.50.2.3:26257 | 10.50.2.3:26257 | v22.1.8 | 2022-10-28 02:37:16.608995 | 2022-10-28 02:37:52.698948 | region=southamerica-east1,zone=southamerica-east1-b | true         | true
   5 | 10.20.3.3:26257 | 10.20.3.3:26257 | v22.1.8 | 2022-10-28 02:37:17.276413 | 2022-10-28 02:37:53.347691 | region=europe-southwest1,zone=europe-southwest1-c   | true         | true
   6 | 10.20.2.3:26257 | 10.20.2.3:26257 | v22.1.8 | 2022-10-28 02:37:17.901019 | 2022-10-28 02:37:49.471961 | region=europe-southwest1,zone=europe-southwest1-b   | true         | true
   7 | 10.50.3.3:26257 | 10.50.3.3:26257 | v22.1.8 | 2022-10-28 02:37:19.393231 | 2022-10-28 02:37:50.98388  | region=southamerica-east1,zone=southamerica-east1-c | true         | true
   8 | 10.14.2.4:26257 | 10.14.2.4:26257 | v22.1.8 | 2022-10-28 02:37:19.472042 | 2022-10-28 02:37:50.990753 | region=us-central1,zone=us-central1-b               | true         | true
   9 | 10.50.1.3:26257 | 10.50.1.3:26257 | v22.1.8 | 2022-10-28 02:37:19.636315 | 2022-10-28 02:37:51.225812 | region=southamerica-east1,zone=southamerica-east1-a | true         | true
(9 rows)
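For a quick scripted check, the same status can be requested in tab-separated form and the live nodes counted. This is a minimal sketch that assumes is_live is the last column, as in the node status output in this post. The helper name count_live_nodes is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads TSV node status on stdin, skips the header row,
# and counts the rows whose last column (is_live) is "true".
count_live_nodes() {
  awk -F '\t' 'NR > 1 && $NF == "true" { n++ } END { print n + 0 }'
}

# Usage on a node (needs a running cluster, so it is left commented out here):
#   cockroach node status --host=10.14.1.4 --certs-dir=certs --format=tsv | count_live_nodes
```

Reading the last field keeps the check independent of the commas inside the locality column.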

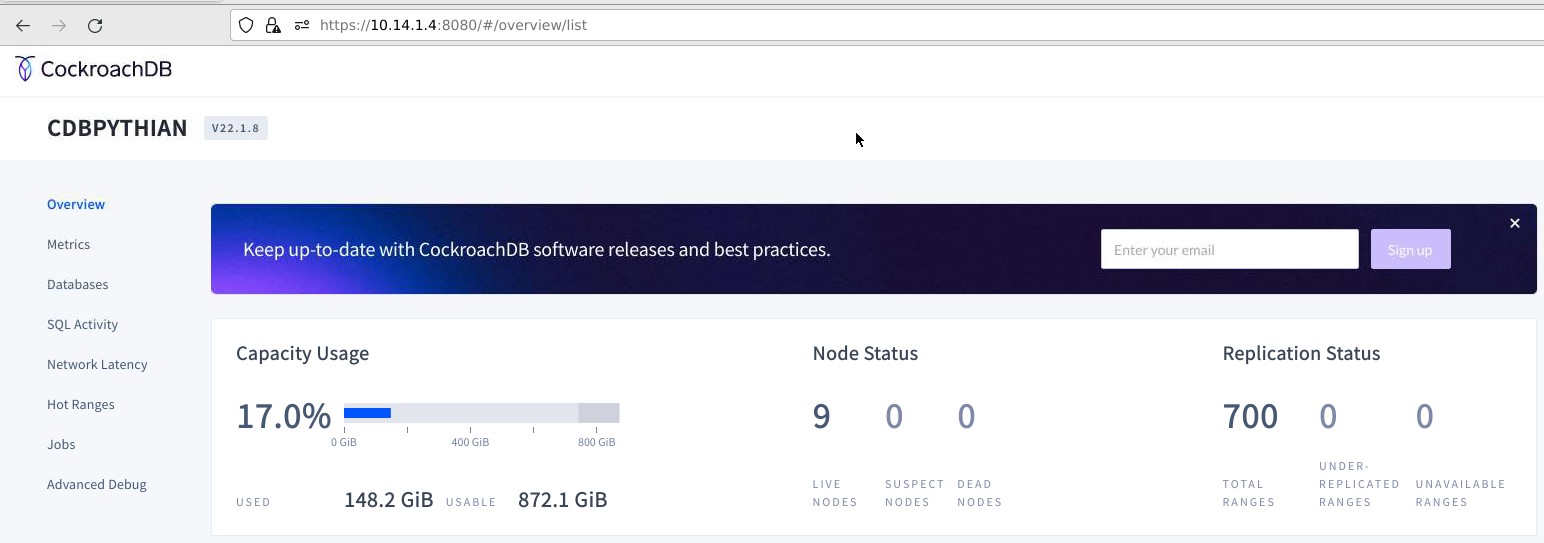

To access the web console, set up a VNC session to the bastion host and connect to any of the nodes on port 8080.

Before that, you will need to create a console user on the DB side and grant it the admin role:

root@10.14.1.4:26257/defaultdb> create user dba with password 'admin';
CREATE ROLE

Time: 1.947s total (execution 1.947s / network 0.000s)

root@10.14.1.4:26257/defaultdb> grant admin to dba;
GRANT

On the console front page, we can see the node status, the replication status, and the capacity usage of the cluster and its nodes:

The Cluster is up and running with all the nodes. We can now start playing around with it.

In the next chapter of the blog, we will change the replication and test how it behaves when losing nodes. We will also test transactions and how the load is balanced across the nodes.

Stay tuned.