How to Send Custom Emails and Notifications in Azure Data Factory

If you’ve used Azure Data Factory (ADF), you’ve probably sent email alerts. The built-in alerts, however, give you no control over the subject line or body of the message. How do you send an email from an ADF pipeline with a custom message, using Gmail as the email provider?

The problem

I recently needed to send a pipeline completion email to a consumer once a data load had finished. I first tried the built-in alerts, but they allowed no customization and added confusion, because there was no way to distinguish failure alerts from completion alerts.

To send a custom alert, I built a Logic App and called it from a Web activity in Azure Data Factory (ADF), so that the Logic App sends the email with a custom subject line and body. In this post, we’ll build that solution: an automatic email that alerts you when a pipeline fails, succeeds or completes.

The solution

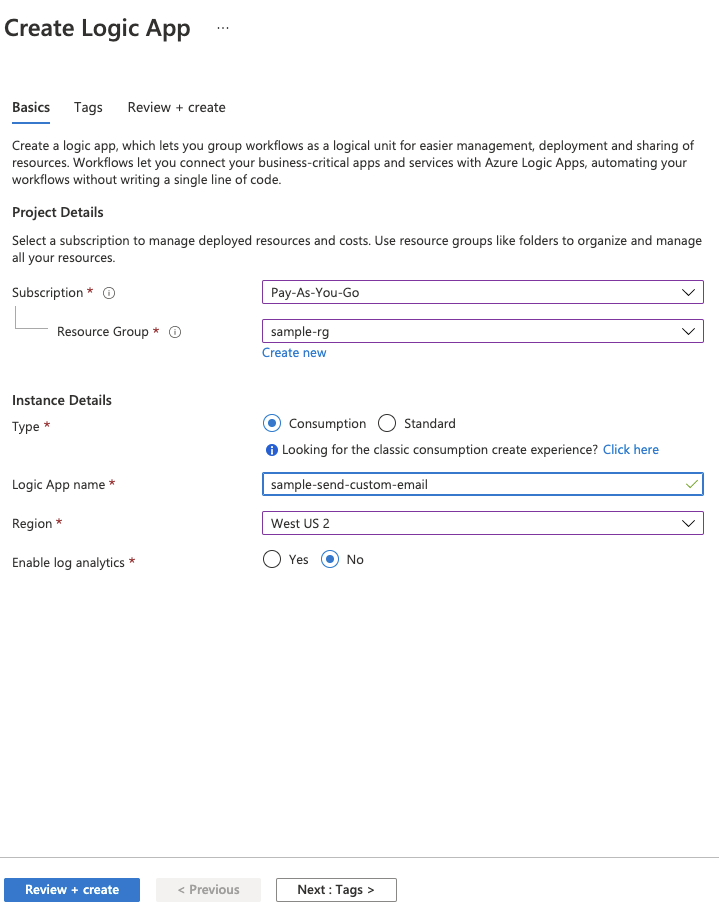

First, you’ll need to create a Logic App. Click “Create a resource” on the left corner of your Azure Portal. Next, search for “Logic App” and click “Create”.

Choose a name, subscription, resource group and location. You can turn log analytics on or off based on your requirement.

Which resource type should you choose, Consumption or Standard? Here are some differences between the two:

| Resource Type | Logic Apps (Consumption) | Logic Apps (Standard) |
|---|---|---|
| Environment | Runs on Multi-Tenant environment or Dedicated ISE | Single-tenant model, meaning no sharing of resources like compute power to Logic Apps from other tenants |
| Pricing Model | Pay-for-what-you-use | Based on a hosting plan with a selected pricing tier |
| # of Workflows | One | Multiple |
| Operational Overhead | Fully managed | More control and fine-tuning capability around runtime and performance settings |
| Limit Management | Azure Logic Apps manages the default values for these limits, but you can change some of these values, if that option exists for a specific limit. | You can change the default values for many limits, based on your scenario’s needs. |
| Support Containerization | No | Yes |

I recommend the Consumption resource type for this particular Logic App, because it will run only when a pipeline fails, succeeds or completes, which suits the pay-for-what-you-use pricing model.

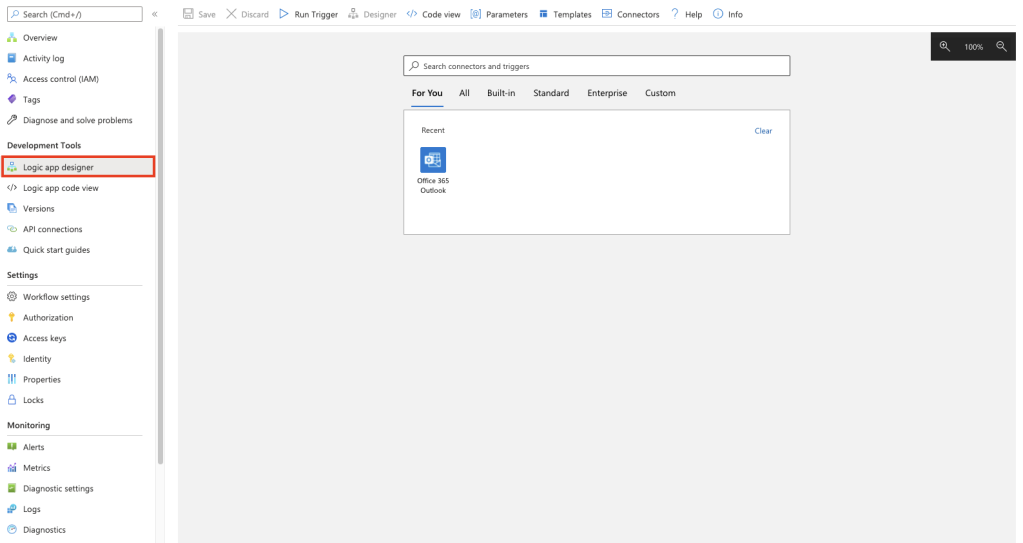

When the Logic App is deployed and ready to use, you’ll receive a notification that helps you navigate to it. To develop your Logic App, click “Logic App Designer” under Development Tools, and a new blade for designing your app will open.

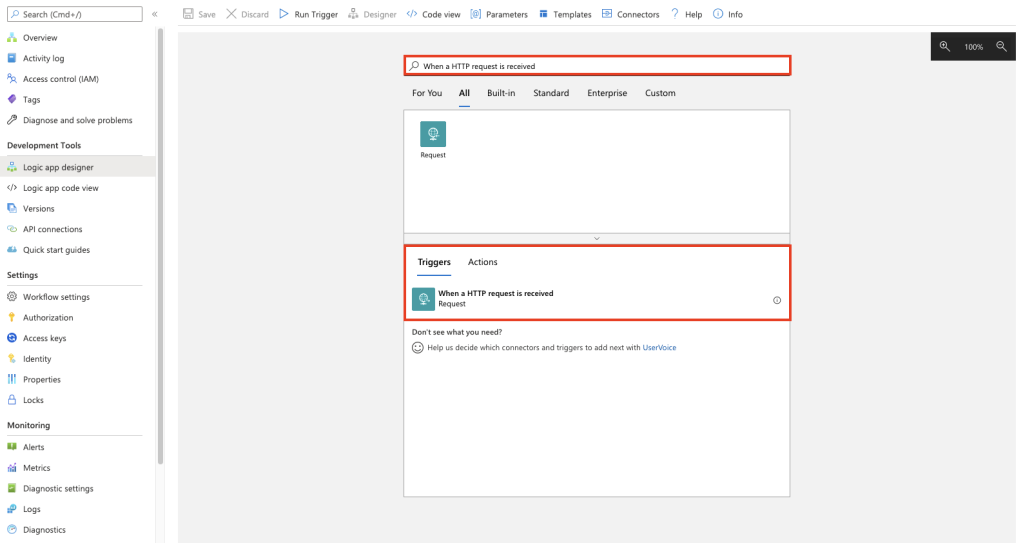

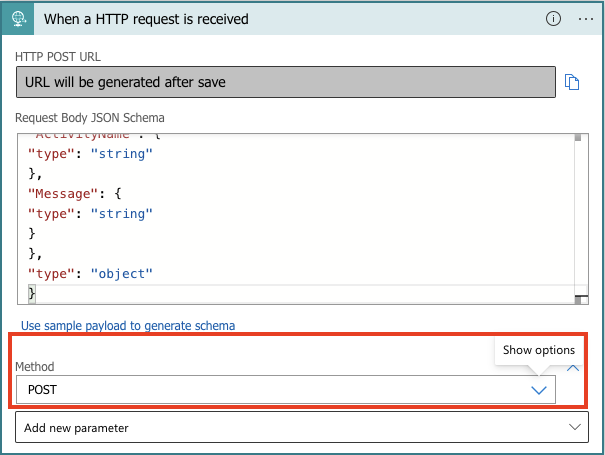

Search for the “When a HTTP request is received” template and select the trigger as your first step:

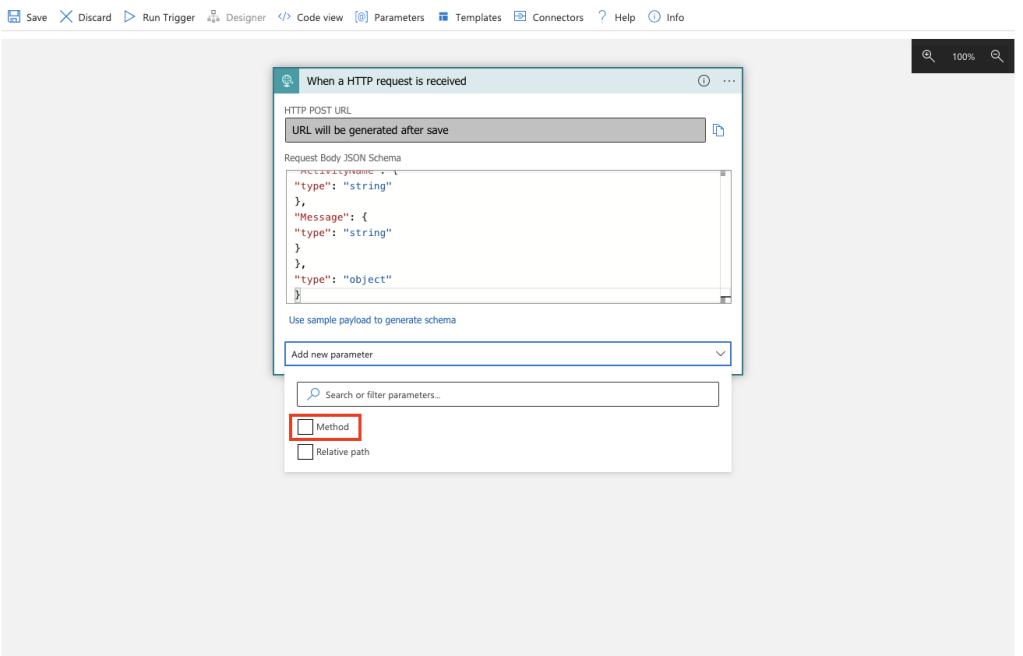

In the trigger, select “Method” from the “Add new parameter” drop-down:

Choose “POST” for the method:

Paste the JSON below into the Request Body JSON Schema field:

    {
      "properties": {
        "EmailTo": {
          "type": "string"
        },
        "Subject": {
          "type": "string"
        },
        "FactoryName": {
          "type": "string"
        },
        "PipelineName": {
          "type": "string"
        },
        "ActivityName": {
          "type": "string"
        },
        "Message": {
          "type": "string"
        }
      },
      "type": "object"
    }

Below is the list of properties passed in the JSON body:

| Property | Description |
|---|---|
| EmailTo | The email address of the receiver. Passed as a user-defined parameter from ADF. |
| Subject | Custom subject for the email. Passed as a user-defined parameter from ADF. |
| FactoryName | Data Factory name. Passed as a built-in parameter from ADF. |
| PipelineName | Pipeline name. Passed as a built-in parameter from ADF. |
| ActivityName | Name of the activity in the pipeline that has failed or completed. Passed as a user-defined parameter from ADF. |
| Message | Custom body of the email. Passed as a user-defined parameter from ADF. |
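
To make this contract concrete, here is a minimal Python sketch that builds a payload with the six properties and checks it against the schema from the trigger step. The `validate_payload` helper and all sample values are hypothetical, and the check covers only what this flat schema needs (known keys with string values); it is not a full JSON Schema validator. The schema itself marks nothing as required, so treating a missing property as a problem is an assumption made here because the email step uses every field.

```python
import json

# Schema copied from the Request Body JSON Schema step.
SCHEMA = {
    "type": "object",
    "properties": {
        "EmailTo": {"type": "string"},
        "Subject": {"type": "string"},
        "FactoryName": {"type": "string"},
        "PipelineName": {"type": "string"},
        "ActivityName": {"type": "string"},
        "Message": {"type": "string"},
    },
}


def validate_payload(payload):
    """Return a list of problems; an empty list means the payload fits."""
    problems = []
    for name in SCHEMA["properties"]:
        if name not in payload:
            # Assumption: every property is needed by the email step.
            problems.append("missing property: " + name)
        elif not isinstance(payload[name], str):
            problems.append(name + " must be a string")
    return problems


# Hypothetical sample values, for illustration only.
sample = {
    "EmailTo": "consumer@example.com",
    "Subject": "Reload for SamplePipeline-pipeline completed",
    "FactoryName": "sample-factory",
    "PipelineName": "SamplePipeline",
    "ActivityName": "Copy data",
    "Message": "The data load finished.",
}

print(validate_payload(sample))
print(json.dumps(sample, indent=2))
```

A check like this can be handy when drafting the request body by hand, before wiring it into the pipeline.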

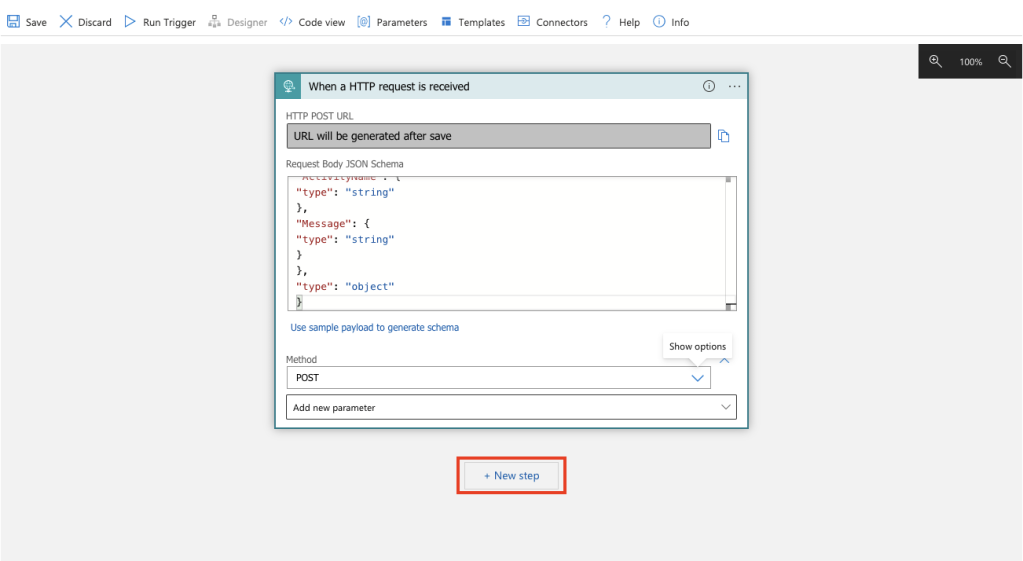

Next, we’ll add a new step to Logic App and send an email:

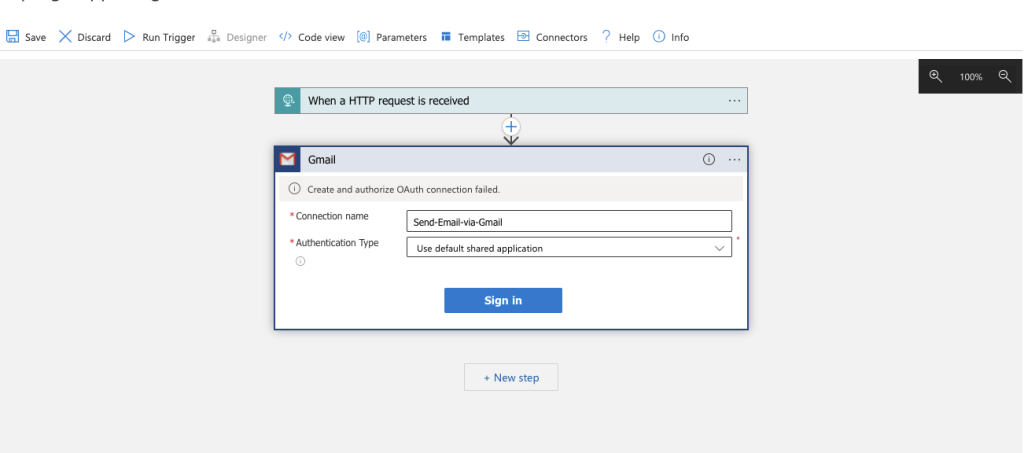

Search for “Send email (V2)” for the new operation and locate a provider. I’ll be using Gmail, but you can use another email provider, such as Outlook:

Enter a connection name and leave “Authentication Type” set to the default shared application:

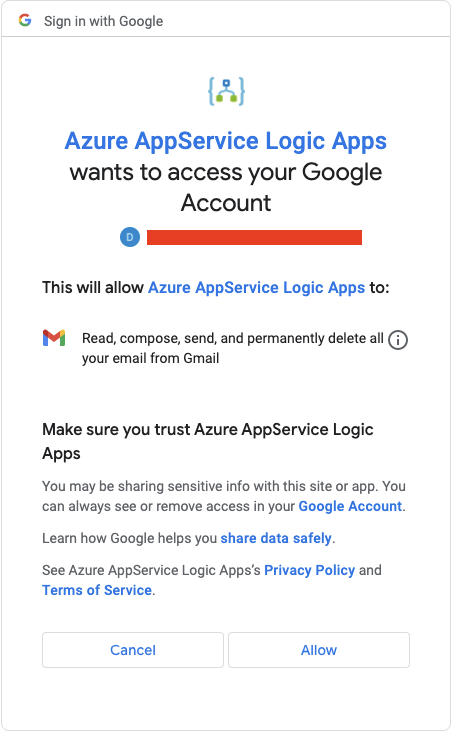

Once you click on “Sign In” you’ll be redirected to authenticate using your Google account credentials. Once you sign in, click “This will allow Azure AppService…” and your connection will be established successfully:

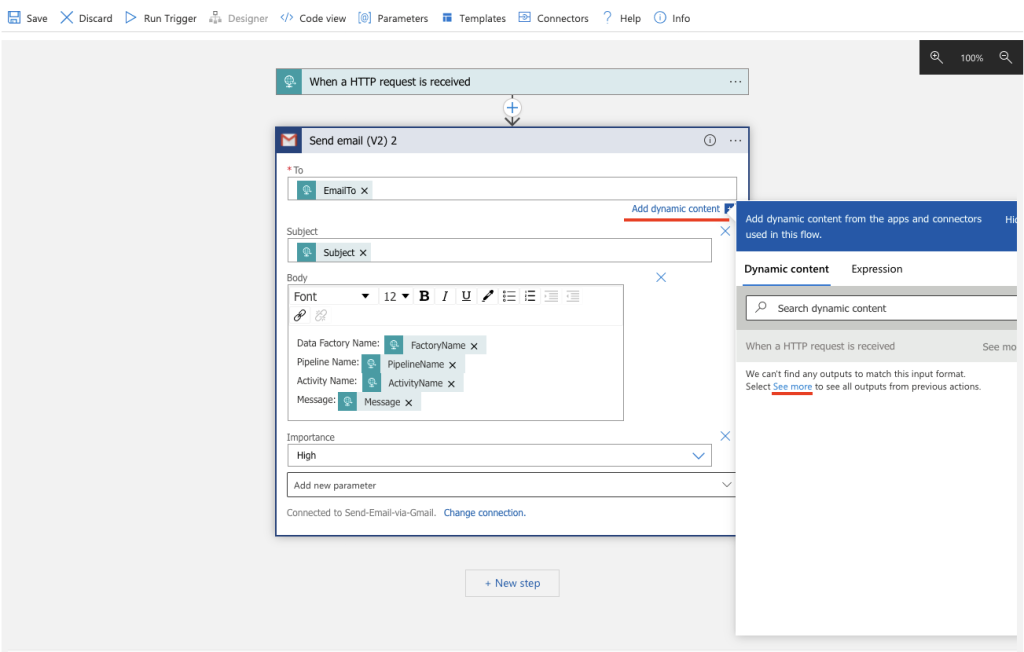

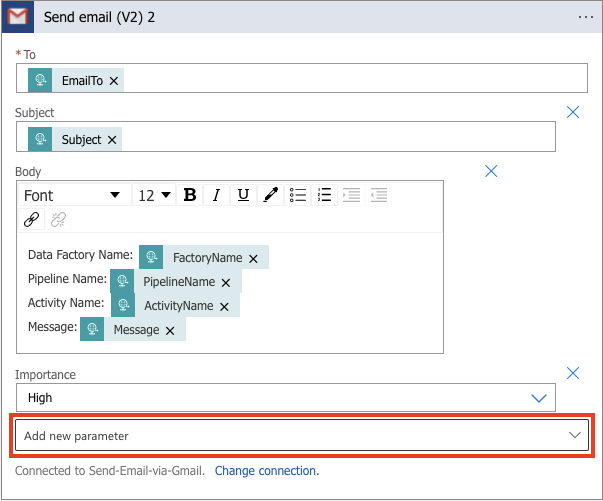

Add new parameters for Subject, Body and Importance by using the “Add new parameter” drop-down. Fill in details using dynamic content based on the JSON schema we added in our start trigger. Click “See more” to do this.

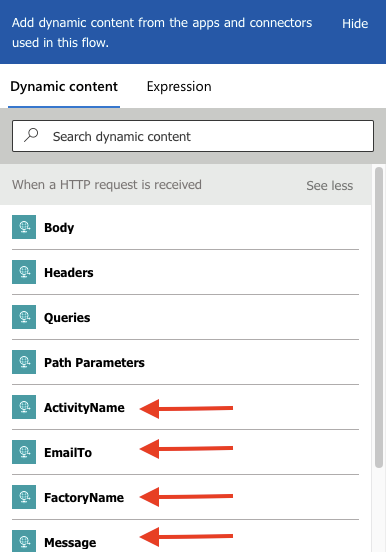

You’ll see all the available variables as shown below:

Once you have added all the variables and parameters your step should look like below:

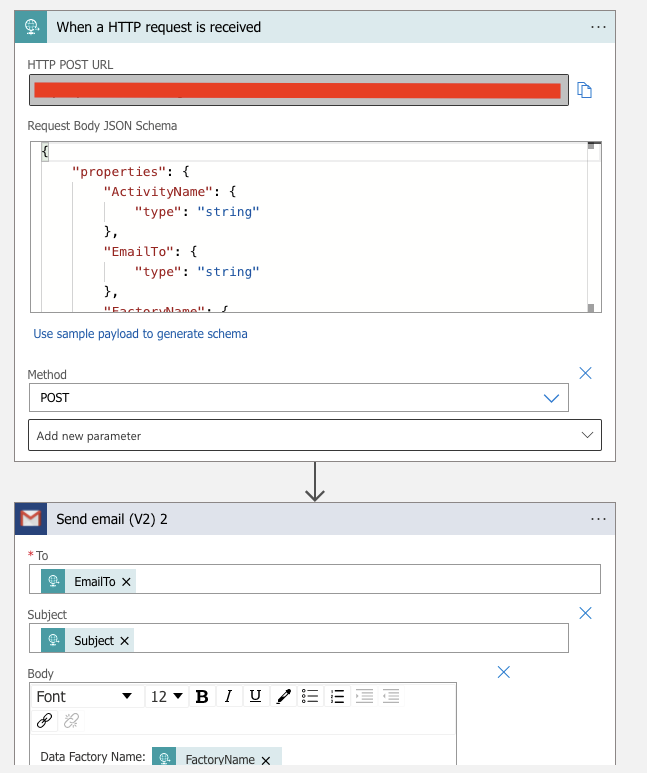

Once you save the Logic App, the HTTP POST URL is generated. Copy the URL and make a note of it; you’ll paste it into the URL field of the Web activity in the ADF pipeline:

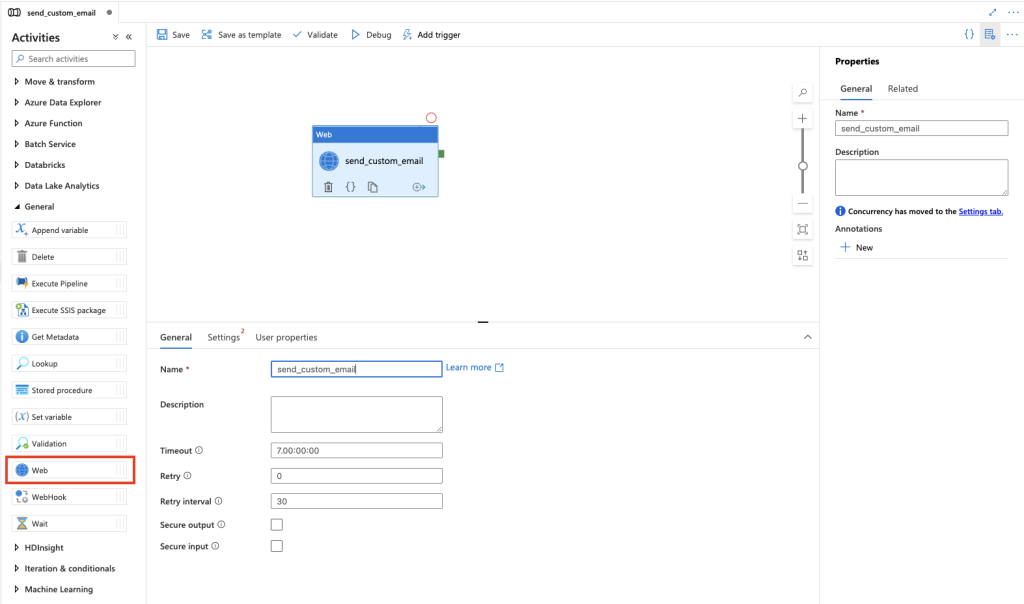
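
Before building the pipeline, it can help to confirm that the Logic App responds to a plain HTTP POST. The following is a rough sketch using only the Python standard library; `LOGIC_APP_URL` is a placeholder rather than a real endpoint, the helper names `build_request` and `send` are hypothetical, and the payload values are made up. The call that actually sends the request is left commented out so nothing is sent until you paste in your own URL.

```python
import json
import urllib.request

# Placeholder only: replace with the HTTP POST URL copied from the trigger.
LOGIC_APP_URL = "https://example.invalid/paste-your-http-post-url-here"


def build_request(url, payload):
    """Build a POST request carrying the payload as a JSON body."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# Hypothetical sample values matching the trigger's JSON schema.
payload = {
    "EmailTo": "consumer@example.com",
    "Subject": "Test message from a local script",
    "FactoryName": "sample-factory",
    "PipelineName": "SamplePipeline",
    "ActivityName": "Copy data",
    "Message": "This is a test of the Logic App trigger.",
}

request = build_request(LOGIC_APP_URL, payload)

# Uncomment once LOGIC_APP_URL holds your real trigger URL:
# print(send(request))
```

The generated URL includes a signature in its query string, so it is worth treating it like a credential and keeping it out of shared scripts and source control.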

Next, we’ll create a sample ADF pipeline to send custom alerts by triggering the Logic App. For this, we’ll need to use Activities > General > Web activity:

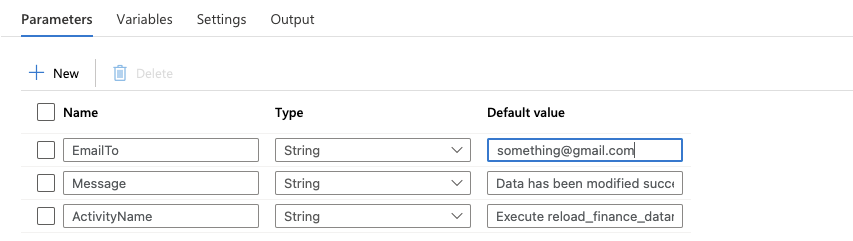

Then, we add parameters to the pipeline that will be passed in the HTTP POST request. The parameters you need to add are listed below; the remaining values are populated from built-in system variables:

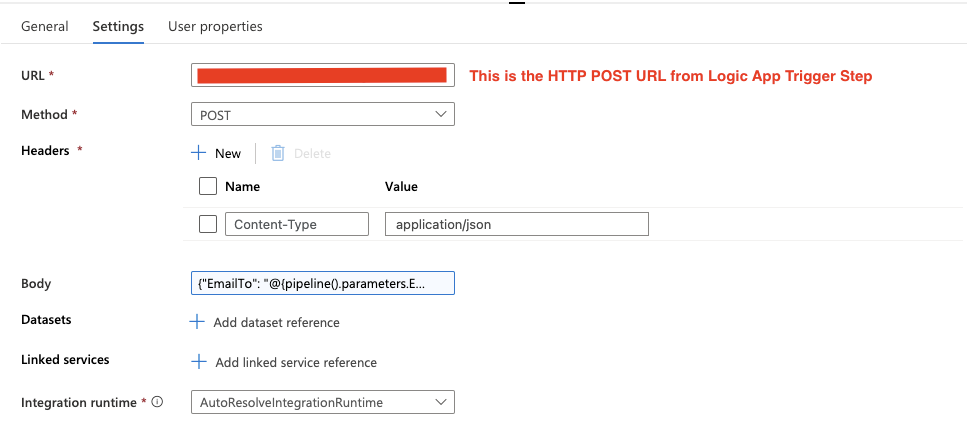

Navigate to the Settings tab of the Web activity and paste the HTTP POST URL you copied from the Logic App trigger step.

For Method, select “POST”.

For Headers, use the following settings:

| Name | Value |
|---|---|
| Content-Type | application/json |

For Body, use the following dynamic content. As you can see below, we’ve used both System Variables and Parameters which will be passed to the Logic App Trigger:

    {
      "EmailTo": "@{pipeline().parameters.EmailTo}",
      "Subject": "Reload for @{pipeline().Pipeline}-pipeline completed",
      "FactoryName": "@{pipeline().DataFactory}",
      "PipelineName": "@{pipeline().Pipeline}",
      "ActivityName": "@{pipeline().parameters.ActivityName}",
      "Message": "@{pipeline().parameters.Message}"
    }
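
To preview what the Logic App will receive, the body can be rendered locally. The Python sketch below is only an approximation of string interpolation, not ADF's expression evaluator: the `render` helper is hypothetical, it handles only the `@{...}` tokens used in this body, and it assumes values are inserted verbatim with no JSON escaping. Under that assumption, it also shows why a parameter value containing a double quote would produce a body that is no longer valid JSON.

```python
import json
import re

# The Web activity body from this post, as a single string.
BODY_TEMPLATE = (
    '{"EmailTo": "@{pipeline().parameters.EmailTo}",'
    '"Subject": "Reload for @{pipeline().Pipeline}-pipeline completed",'
    '"FactoryName": "@{pipeline().DataFactory}",'
    '"PipelineName": "@{pipeline().Pipeline}",'
    '"ActivityName": "@{pipeline().parameters.ActivityName}",'
    '"Message": "@{pipeline().parameters.Message}"}'
)

# Matches one @{expression} token and captures the expression text.
TOKEN = re.compile(r"@\{(.*?)\}")


def render(template, values):
    """Replace each @{expression} with its value, inserted verbatim."""
    return TOKEN.sub(lambda match: values[match.group(1)], template)


# Hypothetical values standing in for system variables and parameters.
values = {
    "pipeline().parameters.EmailTo": "consumer@example.com",
    "pipeline().Pipeline": "SamplePipeline",
    "pipeline().DataFactory": "sample-factory",
    "pipeline().parameters.ActivityName": "Copy data",
    "pipeline().parameters.Message": "The data load finished.",
}

rendered = json.loads(render(BODY_TEMPLATE, values))
print(rendered["Subject"])

# A double quote inside a value breaks the JSON when nothing escapes it.
quoted = dict(values)
quoted["pipeline().parameters.Message"] = 'Table "sales" loaded.'
try:
    json.loads(render(BODY_TEMPLATE, quoted))
    body_is_valid = True
except json.JSONDecodeError:
    body_is_valid = False
print(body_is_valid)
```

If your messages may contain quotes or line breaks, it is worth testing one such message in a debug run before relying on the alert.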

After adding all the details in the Settings tab, select the integration runtime you want to use. Your populated settings should look like the image below:

Save and debug and you should receive the custom message in your email:

Now that we have successfully tested the activity, we can copy the activity and its parameters into our data pipelines and use them to send custom alerts from ADF.

Have any questions about using ADF to send emails? Drop me a line in the comments.
