Improve parsing and query performance - fix Oracle's fixed object statistics

What do I mean by 'fix' the the fixed object statistics? Simply gather statistics to help the optimizer. What are 'fixed objects'? Fixed objects are the x$ tables and associated indexes that data dictionary views are based on. In this case we are interested in the objects that make up the v$sqlstats and v$sql_bind_capture views. If you've never before collected statistics on Oracle fixed object, you may be wondering why you should bother with it, as everything appears to be fine in your databases. After seeing an example you may want to schedule a time to collect these statistics.

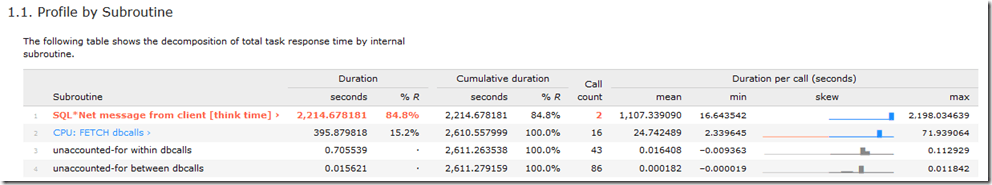

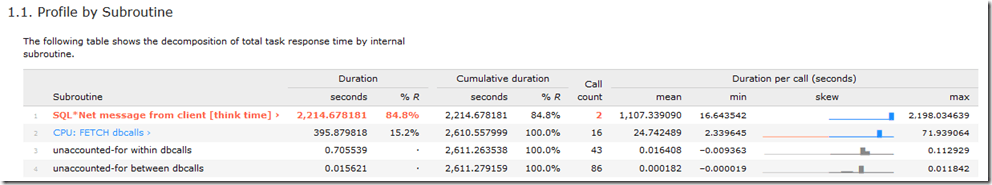

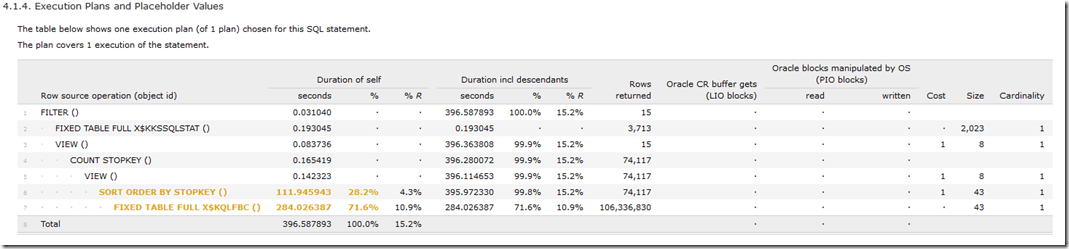

The 'SQL*Net message from client' event can be ignored; most of that time was accumulated waiting for me to come back and exit sqlplus. While the script example shows that the 10046 trace was turned off and the session exited, I had forgot to include those two line for this first run. No matter, as the interesting bit is the next line, 'CPU: FETCH dbcalls'. More than 6 minutes was spent fetching a few rows, so clearly something was not quite right. The SQL plan in the profile showed what the problem was, as the execution plan was far less than optimal. The following is the execution plan from AWR data:

The 'SQL*Net message from client' event can be ignored; most of that time was accumulated waiting for me to come back and exit sqlplus. While the script example shows that the 10046 trace was turned off and the session exited, I had forgot to include those two line for this first run. No matter, as the interesting bit is the next line, 'CPU: FETCH dbcalls'. More than 6 minutes was spent fetching a few rows, so clearly something was not quite right. The SQL plan in the profile showed what the problem was, as the execution plan was far less than optimal. The following is the execution plan from AWR data:

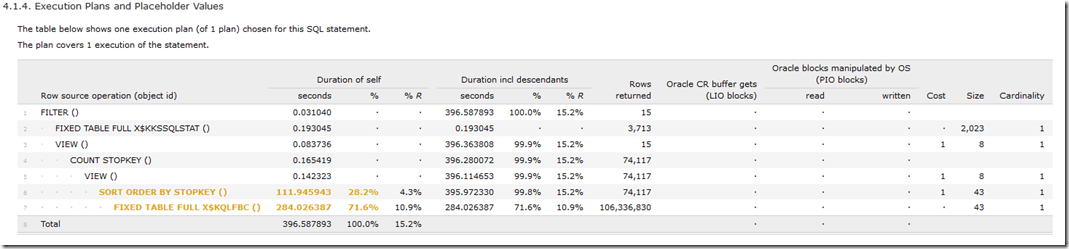

Yikes! There were 106.3E6 rows returned from from X$KQLFBC.

Yikes! There were 106.3E6 rows returned from from X$KQLFBC.

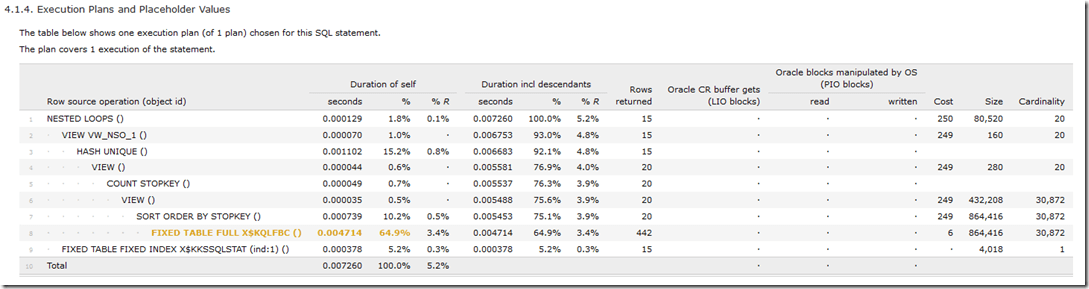

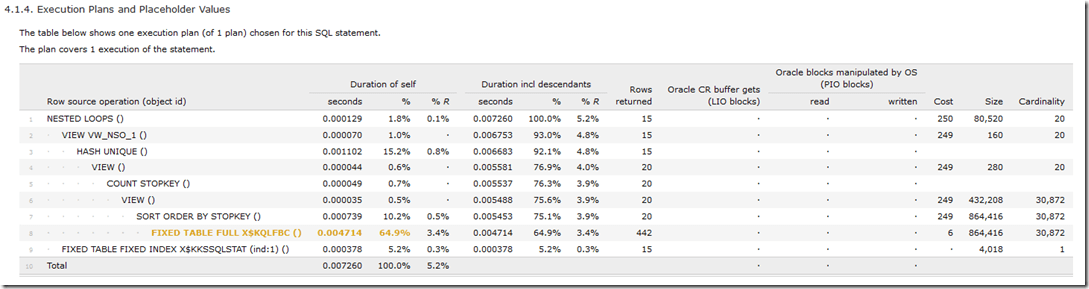

The 0.0047 seconds required to return 442 rows from X$KQLFBC was quite a reduction from the previously seen time of nearly 396 seconds.

The 0.0047 seconds required to return 442 rows from X$KQLFBC was quite a reduction from the previously seen time of nearly 396 seconds.

Searching for SQL

Quite recently I was looking for recently executed SQL, based on the most recently captured bind variables.

[sourcecode language="sql" padlinenumbers="true"] select sql_id, sql_fulltext from v$sqlstats where sql_id in ( select distinct sql_id from ( select sql_id, last_captured from ( select sql_id, last_captured from V$SQL_BIND_CAPTURE order by last_captured desc nulls last ) where rownum <= 20 ) ) [/sourcecode]

I ran the query and was distracted for a few moments. When I next looked at the terminal session where this SQL was executing, no rows had yet been returned. Thinking that maybe 'SET PAUSE ON' had been run, I pressed ENTER. Nothing. From another session I checked for waits in v$session_wait. Nothing there either. If the session is not returning rows, and not registering and event in v$session_wait, then it must be on CPU. This didn't seem an ideal situation, and so I stopped the query with CTRL-C. The next step was to run the query on a smaller and not very busy 11.2.0.2 database. This time I saw that rows were being returned, but very slowly. So now it was time to trace the execution and find out what was going on.

[sourcecode language="bash"] alter session set tracefile_identifier='JKSTILL'; set linesize 200 trimspool on alter session set events '10046 trace name context forever, level 12'; select sql_id, sql_fulltext from v$sqlstats where sql_id in ( select distinct sql_id from ( select sql_id, last_captured from ( select sql_id, last_captured from V$SQL_BIND_CAPTURE order by last_captured desc nulls last ) where rownum <= 20 ) ) / alter session set events '10046 trace name context off'; exit [/sourcecode]

Coming back to this several minutes later, the resulting trace file was processed with the Method R Profiler to find out just where the time was going.

The 'SQL*Net message from client' event can be ignored; most of that time was accumulated waiting for me to come back and exit sqlplus. While the script example shows that the 10046 trace was turned off and the session exited, I had forgot to include those two line for this first run. No matter, as the interesting bit is the next line, 'CPU: FETCH dbcalls'. More than 6 minutes was spent fetching a few rows, so clearly something was not quite right. The SQL plan in the profile showed what the problem was, as the execution plan was far less than optimal. The following is the execution plan from AWR data:

The 'SQL*Net message from client' event can be ignored; most of that time was accumulated waiting for me to come back and exit sqlplus. While the script example shows that the 10046 trace was turned off and the session exited, I had forgot to include those two line for this first run. No matter, as the interesting bit is the next line, 'CPU: FETCH dbcalls'. More than 6 minutes was spent fetching a few rows, so clearly something was not quite right. The SQL plan in the profile showed what the problem was, as the execution plan was far less than optimal. The following is the execution plan from AWR data:

[sourcecode language="sql"] 1 select * 2 from TABLE( 3 dbms_xplan.display_awr(sql_id => :sqlidvar, plan_hash_value => 898242479, format => 'ALL ALLSTATS LAST') 4* ) sys@oravm1 SQL- / PLAN_TABLE_OUTPUT ----------------------------------------------------------------------------------------------------------------- SQL_ID 4h7qfxa9t1ukz -------------------- select sql_id, sql_fulltext from v$sqlstats where sql_id in ( select distinct sql_id from ( select sql_id, last_captured from ( select sql_id, last_captured from V$SQL_BIND_CAPTURE order by last_captured desc nulls last ) where rownum <= 20 ) ) Plan hash value: 898242479 ------------------------------------------------------------------------------------------- | Id | Operation | Name | E-Rows |E-Bytes| Cost (%CPU)| E-Time | ------------------------------------------------------------------------------------------- | 0 | SELECT STATEMENT | | | | 1 (100)| | | 1 | FILTER | | | | | | | 2 | FIXED TABLE FULL | X$KKSSQLSTAT | 1 | 2023 | 0 (0)| | | 3 | VIEW | | 1 | 8 | 1 (100)| 00:00:01 | | 4 | COUNT STOPKEY | | | | | | | 5 | VIEW | | 1 | 8 | 1 (100)| 00:00:01 | | 6 | SORT ORDER BY STOPKEY| | 1 | 43 | 1 (100)| 00:00:01 | | 7 | FIXED TABLE FULL | X$KQLFBC | 1 | 43 | 0 (0)| | ------------------------------------------------------------------------------------------- PLAN_TABLE_OUTPUT ----------------------------------------------------------------------------------------------------------------- Query Block Name / Object Alias (identified by operation id): ------------------------------------------------------------- 1 - SEL$88122447 2 - SEL$88122447 / X$KKSSQLSTAT@SEL$4 3 - SEL$6 / from$_subquery$_002@SEL$5 4 - SEL$6 5 - SEL$FEF91251 / from$_subquery$_003@SEL$6 6 - SEL$FEF91251 7 - SEL$FEF91251 / X$KQLFBC@SEL$10 Note ----- - Warning: basic plan statistics not available. These are only collected when: * hint 'gather_plan_statistics' is used for the statement or * parameter 'statistics_level' is set to 'ALL', at session or system level 39 rows selected. [/sourcecode]

While useful, this plan is not giving much information about why this took so long. If pressed I would just whip up a Bash and Awk one-liner to parse the trace files and find out where this time was going. In this case though I could just consult the Method R profile again.

Yikes! There were 106.3E6 rows returned from from X$KQLFBC.

Yikes! There were 106.3E6 rows returned from from X$KQLFBC.

Collecting the Fixed Object Statistics

Rather than spend time analyzing this further, it seemed that here was a clear case for collecting statistics on fixed objects in the database. The following SQL was run:

[sourcecode language="sql"] exec dbms_stats.gather_fixed_objects_stats [/sourcecode]

The next step was to rerun the query. This time it ran so quickly I wondered if it had even worked. As before, tracing had been enabled, and a profile generated from the trace. There was now quite an improvement seen in the execution plan:

The 0.0047 seconds required to return 442 rows from X$KQLFBC was quite a reduction from the previously seen time of nearly 396 seconds.

The 0.0047 seconds required to return 442 rows from X$KQLFBC was quite a reduction from the previously seen time of nearly 396 seconds.