Obtaining an active-passive ProxySQL on FreeBSD

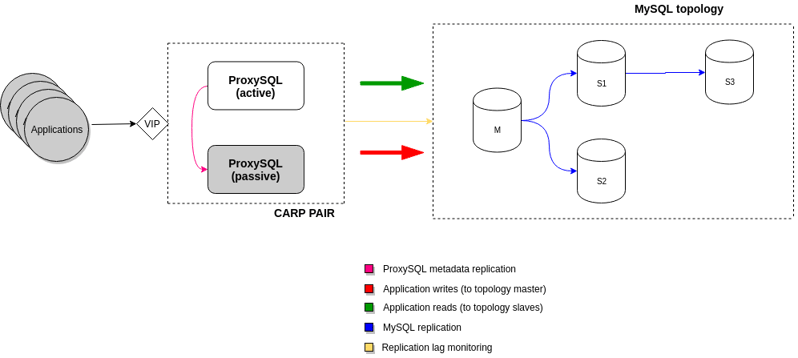

When designing a highly-available MySQL architecture, having a proxy to route the traffic to the appropriate instances is crucial to achieve transparent (or almost) failovers/switchovers. ProxySQL, a popular open source, SQL-level proxy is a great choice for this, and even though an experimental clustering feature is available, we could follow a simpler active-passive approach too, based on Common Address Redundancy Protocol (CARP), which will also provide a highly available IP for the applications to connect.

When designing a highly-available MySQL architecture, having a proxy to route the traffic to the appropriate instances is crucial to achieve transparent (or almost) failovers/switchovers. ProxySQL, a popular open source, SQL-level proxy is a great choice for this, and even though an experimental clustering feature is available, we could follow a simpler active-passive approach too, based on Common Address Redundancy Protocol (CARP), which will also provide a highly available IP for the applications to connect.

Solution overview

As mentioned before, this setup relies on a shared IP configured through CARP on the two hosts that will be running ProxySQL. CARP configuration is outside the scope of this post but the steps are on available on the FreeBSD documentation. We then need to make sure all ProxySQL configuration changes performed on the active node are replicated to the standby; since sqlite is used to store the configuration, I opted for shipping online sqlite backups from the active to the passive node. Finally, we needed a way to connect the CARP VIP and ProxySQL together so when a role transition occurs, ProxySQL would follow. The device state change daemon (devd) allows executing specific actions upon certain events, which is exactly what we need. In the following sections, I'll provide additional details for each part of the solution.

In the following sections, I'll provide additional details for each part of the solution.

ProxySQL metadata replication

To replicate ProxySQL metadata, we basically copy online sqlite backups between nodes every minute through SSH. Since ProxySQL metadata is rather small and doesn't change often, this is a feasible approach. A dedicated OS user and passwordless SSH configured between the ProxySQL boxes will be sufficient. Backups are taken using sqlite3.backupsqlite3.backup command which generates an online copy of the sqlite database. Finally, scp is used to copy the backup to the opposite node. The backup/copy script is added to cron when the node is promoted to active. A purge script is also added to the node acting as standby to remove old backup files.Device state change daemon (devd)

The devd daemon will be used to execute bash scripts on certain CARP events. Namely, when a specific CARP interface changes its state (Master -> Backup or Backup -> Master). On FreeBSD 11, the devd configuration file is /etc/devd/carp.conf and has the following structure: each event or statement has a type ( notify in this case) and it is represented by a block in curly brackets. notify is used when we want to trigger specific actions based on kernel event notifications sent to user land. The number next to the statement type determines the priority (0 being the lowest) in the case that two statements match the same event. Finally, the sub-statement clauses within the block determine the event characteristics and the associated actions. On the example below, the first block corresponds to any CARP events where the VIP at em0 interface, with the virtual host id (vhid) 9, switches to master state. When this happens, the psqlrestore.sh script will be executed, passing type and subsystem as parameters. Also, a message will be logged into the system log (/var/log/messages). The second block is similar to the former, but the disablepsqlbkp.sh script is executed when the CARP VIP transitions to the BACKUP state instead.notify 30 {

match "system" "CARP";

match "type" "(MASTER)";

match "subsystem" "9@em0";

action "/root/psqlrestore.sh $type $subsystem";

action "logger -p local0.notice -t CARP-PROXYSQL '$subsystem role is MASTER. Restoring ProxySQL configuration '";

};

notify 30 {

match "system" "CARP";

match "type" "(BACKUP)";

match "subsystem" "9@em0";

action "/root/disablepsqlbkp.sh $type $subsystem";

action "logger -p local0.notice -t CARP-PROXYSQL '$subsystem role is BACKUP. Disabling ProxySQL backups'";

};

All components working together

Under normal conditions, the master node will be copying online sqlite backups to the standby node through SSH. Those backups will accumulate on a specific directory and the same script will take care of keeping the number of files under control. Upon failover/switchover, the following will happen:1) A CARP role switch is triggered whether manually or because of a host failure.

2) devd detects the change in the vhid VIP role and triggers the restore script.

3) This script finds the latest sqlite backup, copies it to the ProxySQL data directory, set the right privileges and starts the ProxySQL service.

4) The script will also enable a cron task to start shipping backups to the opposite node.

5) If it was a switchover and the old master node is alive, devd will execute a script that will disable sqlite backups (disablepsqlbkp.sh) and enable a received backups purge task.

Next steps

Even though this solution was tested successfully, points such as the following need to be considered before deploying it in production:- Add logic to prevent corrupted backup restores to affect failover.

- Proper sqlite backups monitoring.

- Proper monitoring to ensure that the CARP role and the ProxySQL state are not opposite.