How EBS Concurrent Processing Should Run on Oracle RAC

Suggested audience: Oracle Apps DBAs, Oracle E-Business Suite technical architects, and the Oracle ATG team.

Introduction

In this post, I describe the Oracle E-Business Suite (EBS) concurrent processing configuration options currently available in an Oracle Real Application Clusters (RAC) environment and share my views on how to improve them. My views are very close to enhancement request 4159920 APPSRAP:PCP/CONCURRENT MANAGER – RAC NODE AFFINITY & LISTENER LOAD BALANCING, which was originally submitted by Dell engineers in 2005. Please feel free to share your opinion on the PCP & RAC setup in the comments section below. Let me know if you share my vision and if it makes sense to you. Your opinion is important to me, and I will use it in further conversations with the Oracle ATG team. If you have time and think that your organization would benefit from enhanced functionality, please log an SR with Oracle Support asking them to make enhancement request 4159920 a priority, and add your business case. I hope you love EBS as much as I do and want to make it even better :)

What is wrong with the current options?

RAC was introduced for two main reasons: a) performance (scalability) and b) availability. An application that runs on RAC should be able to leverage the performance benefits and ensure controlled fail-over in case of problems with any of the RAC nodes (read: availability). EBS components should switch to available nodes based on a well-designed and implemented fail-over plan. Below is a list of options available today for configuring the concurrent processing components in a RAC environment. If there is enough interest, I will provide more details on each of the options in a separate blog post. Please let me know if you’re interested using the comments section below.

- Today, the default option in EBS leverages the <environment name>_BALANCE TNS alias and load balances all services across all available database nodes [MOS Note ID 823587.1].

- While this option works well for a small EBS implementation with over-provisioned hardware, it could lead to significant performance penalties in more active or less optimized environments. If we spread functionality across all available RAC nodes without functional partitioning, there is a very good chance that the interconnect network between database nodes will get overloaded, which will significantly reduce RAC performance benefits and, in some extreme cases, make performance worse than on a single instance (a single instance processes the load faster than several instances in a RAC configuration).

- Parallel Concurrent Processing (PCP) enables you to specify a TNS alias per application node via the s_cp_twotask context file parameter. This way, if there are at least as many application nodes as database instances, we could point each application node to its own database instance and ensure functional partitioning. This separates different application modules by assigning each concurrent manager to its own application node using the “Primary Node” parameter (e.g. payroll, human resources, and financials each run on a separate application node and its corresponding RAC node).

- This way we have better control over RAC interconnect load by ensuring that application functionality using the same data runs on the same RAC node.

- If one database node fails, EBS switches all the concurrent managers running on the associated application node to the node specified by the “Secondary Node” parameter of each concurrent manager. Please note that this fail-over process works if the “Concurrent:PCP Instance Check” profile value is set to ON.

- This method has one significant disadvantage: you need at least as many application nodes as RAC nodes. This wasn’t originally a big issue because most EBS customers executed concurrent managers on database nodes. In doing so, if a database node went down, it would stop application processes associated with that node, and PCP would migrate those processes to another concurrent processing node. Today, because most processing happens on the database side, there are often fewer application nodes than RAC nodes.

- If an organization wants to benefit from application partitioning, it could implement the third option (the “Target Instance” parameter, described below) or introduce, at a minimum, the same number of application nodes as RAC nodes. To save on hardware resources, application nodes could be virtualized; however, this doesn’t reduce the amount of operational effort needed to manage those nodes (e.g. patching).

- In EBS R12.1.3, Oracle introduced the “Target Instance” concurrent program level parameter (navigation path: Concurrent => Program => Define => Search => Session Control). This allows you to specify a preferred RAC instance for a specific concurrent program. Oracle will try to execute the requests associated with that concurrent program on the specified RAC instance. If that is impossible, it will execute them on any available node (switching to the default mode).

- This seems to be a very good option compared to other options we have talked about. It lets you assign a specific RAC node to all Payroll or Finance programs, which ensures that they are executed on a specific node, reduces interconnect load, and leverages RAC performance benefits.

- There are a few disadvantages to this approach. One of them relates to the controlled fail-over I talked about earlier in this blog post. If a RAC node fails, there is no good control over where each concurrent request is executed. This may trigger a bit more chaos where it is least expected. One way to mitigate this problem is to update the “Target Instance” parameter immediately after the node failure using a semi-automated script; however, this requires customization, so it may not be a well-supported option.

- The other challenge with this option is complex maintenance. There are hundreds of concurrent programs, and it could be very time-consuming to plan and assign RAC nodes to each or some of them.
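To make the difference in fail-over control concrete, here is a minimal Python sketch of the two placement rules described above. It is a toy model under stated assumptions, not EBS code: the function names `pcp_node` and `candidate_instances` and the node names are hypothetical, and “any available node” is modelled simply as “every instance that is still up is a candidate”.

```python
from typing import List, Optional, Set


def pcp_node(primary: str, secondary: Optional[str], nodes_up: Set[str]) -> Optional[str]:
    # PCP-style placement (hypothetical model): the manager runs on its
    # Primary Node if that node is up, otherwise on its Secondary Node.
    if primary in nodes_up:
        return primary
    if secondary is not None and secondary in nodes_up:
        return secondary
    return None  # neither node is available


def candidate_instances(target: str, instances_up: List[str]) -> List[str]:
    # 'Target Instance'-style placement (hypothetical model): requests go to
    # the target instance while it is up. Once it is down, every surviving
    # instance is a candidate, so the DBA no longer controls the placement.
    if target in instances_up:
        return [target]
    return list(instances_up)
```

With PCP the fail-over destination is a single, pre-planned node; with “Target Instance” the result after a failure is a list of possibilities, for example `candidate_instances("PRD1", ["PRD2", "PRD3"])` yields both surviving instances.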

Solution

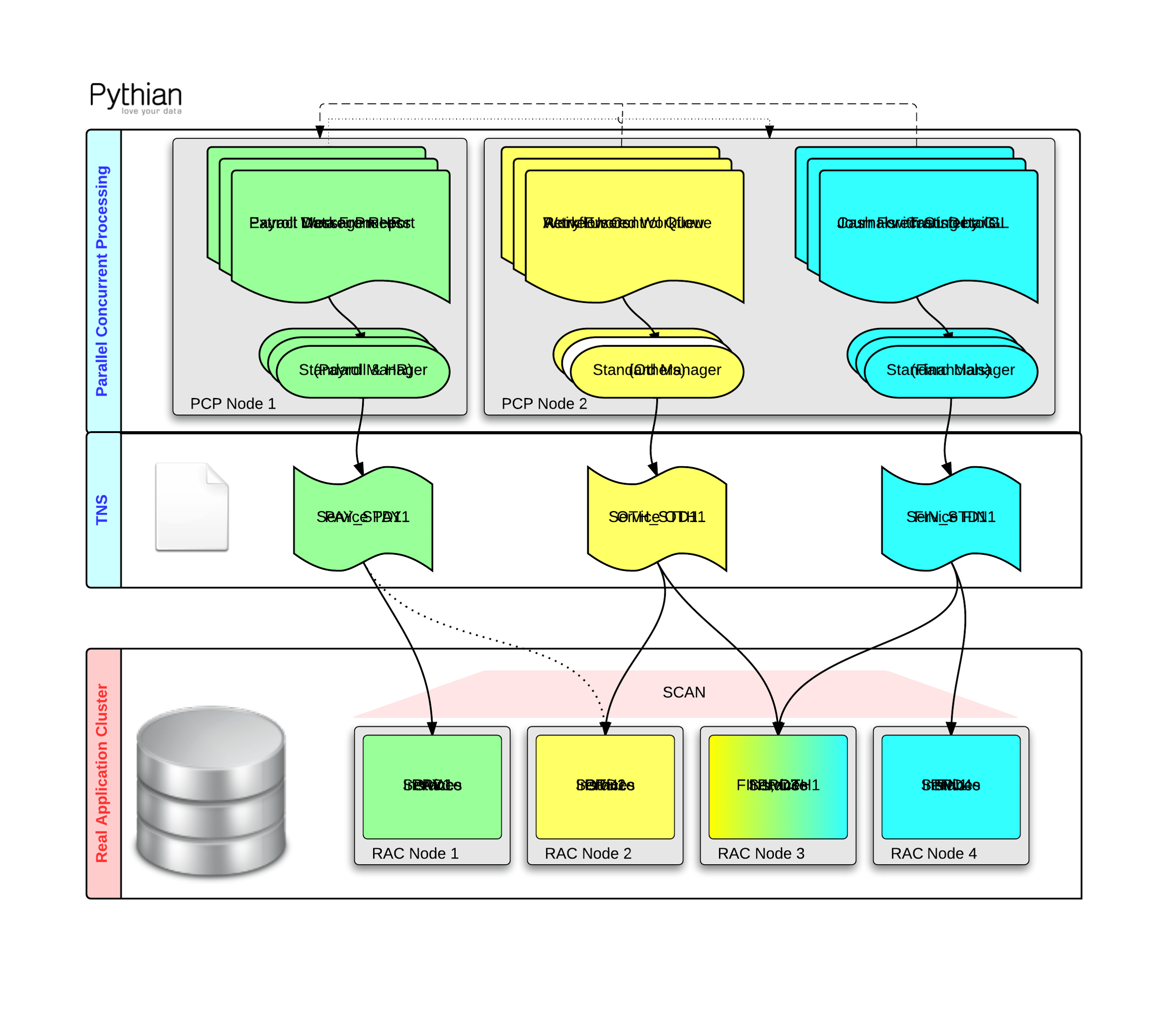

I think that there should be an option to assign a TNS alias (service) to an individual Concurrent Manager. It would significantly simplify the concurrent processing configuration in RAC environments. Have a look at the diagram. Let me go through some of the key components here:

- I have highlighted the components related to different modules with different colors (e.g. payroll and human resources – green, finance – blue, etc.) to make the diagram easier to understand.

- As soon as there is an option (as a result of implementing enhancement request 4159920) to assign a TNS alias to a concurrent manager, it will address the main limitations of the current configuration options listed in the first part of this blog.

- We don’t need to worry about the number of application servers. Two application servers for redundancy purposes should be enough in a minimal configuration. Concurrent managers could establish connections to as many database instances as necessary.

- The solution leverages Oracle Net Services. The fail-over configuration is done on the database side on a per-service basis, which allows you to design and implement a fail-over plan well before a fail-over happens. Fail-overs happen without any human intervention, preventing chaotic resource utilization.

- It is simpler than assigning a “Target Instance” parameter to each concurrent program. Most EBS environments have queues and incompatibility parameters defined already. The rest is simple. Just add a TNS alias and assign it to the concurrent manager. A single service or TNS entry needs to be added to adjust the configuration if necessary.

- In addition, the solution offers several other benefits, including:

- A single service could be load balanced over several RAC instances, if necessary.

- It is very easy to switch a service to run on one RAC instance or a set of RAC instances, or to exclude an instance from executing concurrent processing.

- Most of the changes could be executed dynamically without stopping or restarting concurrent managers or changing configuration files.

- Services are an important Oracle 11gR2 cluster concept and should be leveraged by any application running on RAC.

- Resource Manager could be used to limit the amount of resources available to a certain module at any time during the life cycle. This is especially important when a database cluster, for one reason or another, runs at limited capacity.

- Resources (e.g. CPU, IO, etc) could be controlled per service defined (e.g. payroll, finance, etc.).

- To my knowledge, the feature could be implemented without many architectural changes. In fact, it looks to me like the “Target Instance” parameter is a more complex change, as each concurrent request executed on a concurrent manager may need to create a connection to a different database instance. Proposed solution:

- Internal Concurrent Manager should be able to monitor all concurrent managers the way it did before.

- Service Managers should have no problem starting the concurrent managers by setting the appropriate TWO_TASK value.

- Service Monitors monitor the Internal Concurrent Manager the same way they do today.

- In case the diagram is difficult to follow, let me list the components used:

- Payroll Worker Process, Payroll Message Report, etc – concurrent programs grouped using incompatibility parameters to be executed by one Concurrent Manager.

- “Standard Manager (Payroll & HR)”, “Standard Manager (Financials)”, etc – are separate concurrent managers (queues).

- PAY_STD1, OTH_STD1, FIN_STD1 – entries in the tnsnames.ora file on the application servers.

- PAY1, OTH1, FIN1 – services defined on the database cluster side. Each service has one or several primary instances and one or several fail-over instances. Each service can have certain resources assigned by Resource Manager.

- RAC Node 1/2/3/4 – are RAC cluster nodes.

- Instance PRD1/2/3/4 – are instances running on the RAC nodes.

- PCP Node 1/2 – are concurrent processing application nodes.

- Please note that:

- There are fewer application nodes than database nodes, as concurrent processing no longer depends on which application node it runs on.

- Because of PCP, the concurrent managers can still fail over to another application node based on the “Primary Node” and “Secondary Node” settings.
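The per-service fail-over idea from the diagram can be sketched in Python as follows. This is a simplified model under stated assumptions, not Oracle code: the `Service` class and `tns_entry` helper are hypothetical, the host name `ebs-scan.example.com` and port 1521 are placeholders, and the fail-over rule (each failed primary instance is replaced by one surviving fail-over instance) is a simplification of how a cluster relocates services. Only the alias, service, and instance names come from the diagram.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Service:
    name: str
    primary: List[str]    # instances the service normally runs on
    failover: List[str]   # instances used only when a primary is down

    def running_on(self, instances_up: Set[str]) -> List[str]:
        # Keep the surviving primaries and replace each failed primary
        # with one surviving fail-over instance (simplified rule).
        up = [i for i in self.primary if i in instances_up]
        missing = len(self.primary) - len(up)
        spares = [i for i in self.failover if i in instances_up]
        return up + spares[:missing]


def tns_entry(alias: str, service: str,
              host: str = "ebs-scan.example.com", port: int = 1521) -> str:
    # Render a tnsnames.ora entry that connects by service name, so the
    # alias follows the service wherever the cluster runs it.
    return (
        f"{alias} =\n"
        f"  (DESCRIPTION =\n"
        f"    (ADDRESS = (PROTOCOL = TCP)(HOST = {host})(PORT = {port}))\n"
        f"    (CONNECT_DATA = (SERVICE_NAME = {service}))\n"
        f"  )\n"
    )
```

For example, `Service("PAY1", ["PRD1"], ["PRD2"])` runs on PRD1 while it is up and moves to PRD2 when PRD1 fails, while `tns_entry("PAY_STD1", "PAY1")` stays unchanged on the application side. The fail-over plan lives entirely in the service definition, which is the point of the proposal.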

I am very interested to hear your thoughts and experience on how to configure and use Concurrent Processing in Real Application Clusters environments. Please feel free to comment or even send me an email or a message directly. Please keep in mind Dell engineers’ enhancement request 4159920 APPSRAP:PCP/CONCURRENT MANAGER – RAC NODE AFFINITY & LISTENER LOAD BALANCING. If you think this is an option your organisation could benefit from, feel free to log an SR with Oracle Support and ask to add your business case to the enhancement request/bug description.

Yury
