Tracking data flow mechanics of IoT, or a piece of "Pi-oT"

A piece of pi(e): "In contrast to a piece of cake, means a task that looks easy but once you get into it is actually very difficult or time-consuming." (Urban Dictionary)

In the early days of my career, I supported development on IBM MQ to broker Swift messages between financial institutions. Swift messages follow ISO-established messaging standards, allowing financial institutions to exchange transactions involving financial instruments and services.

Fast forward to the present day: the Internet of Things (IoT) and internet-connected devices are rapidly taking a more active part in decision-making as we go about our daily lives. Like it or not, these devices are here to stay. Before I explore exactly how our data is fed into the cloud (spoiler alert: the MQTT messaging protocol), I would like to briefly describe a great reference case at a mining company. No, not the bitcoin variety: the extraction of the raw materials that build and run the towns and cities around us.

Wherever we may be, we are rarely far from a sensor, monitor or device that triggers an event based on its local environment, sending bursts of data to a remote environment for storage, trend processing and predictive analytics. IoT data can be treated as a real-time ingestion pipeline into cloud platforms, enriching existing business insight with streaming sensor data. The devices, network infrastructure and sensor data must be resilient and secure enough for accurate readings to be processed, so that preventative actions can be taken as part of an end-to-end Enterprise Data Platform.

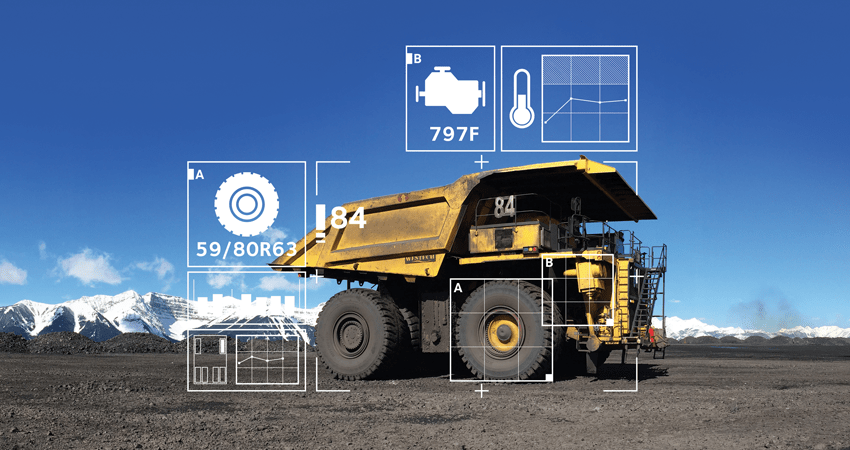

Now imagine, if you will, sensor data being collected from mining trucks. The breakdown of just one of these massive vehicles costs time and, crucially, money. No detail is too small: sensors that remotely monitor wear and tear on the tires alone could save nearly $1M annually by allowing maintenance to be planned ahead of component failure.

One of Canada's largest diversified mining companies has major business units focused on copper, metallurgical coal, zinc, gold and energy. Its mining haul trucks are some of the biggest and most costly trucks to operate.

A modern mining haul truck is a million-pound IoT device on wheels. Because operating these trucks represents 40 percent of mining site costs, they need to keep moving day and night to earn their keep. The challenge is to harness the power of their sensor data to predict costly issues, such as electrical and suspension failures, before they occur.

Pythian leveraged Google Cloud Platform (GCP) to analyze vast amounts of operational telemetry data and produce machine learning models. These models could predict events and recommend actions to optimize haul truck operations. Further details on this use case are available on our Pythian blog.

So there's a fantastic case for IoT outside the spooky, voice-activated overlords changing the hue of our living room lights.

Unfortunately, not everyone has access to an oversized Tonka truck!

However, don't pack away your toys just yet; there is another way. Irrespective of the use case, the IoT data architecture remains, for all intents and purposes, the same. What if we could tap into a device of our own choosing, have it send its data harvest to the cloud for onward analysis and, in so doing, study the mechanics of IoT data ingestion in more depth?

In partnership with Google, Coral has produced an Environmental Sensor Board that collects atmospheric data such as ambient light, temperature, pressure and humidity and, well, that's about all. It can also be extended to collect data from other sensors, though that's a post for another time. The board includes a secure cryptoprocessor with Google keys that enables connectivity with Google Cloud IoT Core services, so the device can connect securely to the cloud and its sensor data can be collected, processed and analyzed.

This post is not intended to walk you through configuring devices, as that is widely documented. For reference, I used the GCP Cloud IoT Core community tutorial with a Raspberry Pi 4 Model B.

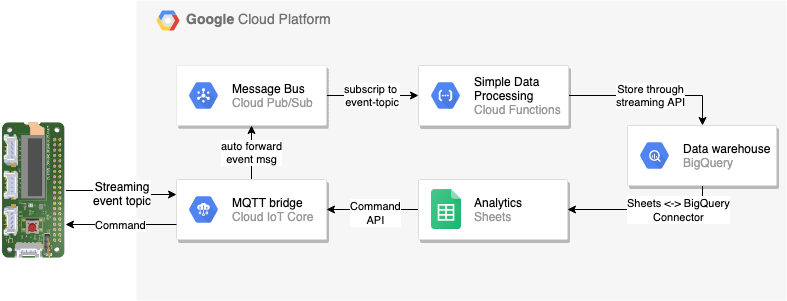

Following the tutorial, I was able to send my on-premises sensor data relatively quickly from the Pi to BigQuery via Cloud IoT Core and Pub/Sub.
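To make the device side of that pipeline concrete, here is a minimal sketch of how one round of readings might be packaged as a JSON telemetry payload before publishing. The function name, field names and units are assumptions for illustration; the community tutorial's actual schema may differ, and real readings would come from the board's sensors rather than being passed in by hand.

```python
import json
import time
from typing import Optional


def build_telemetry_payload(device_id: str,
                            temperature: float,
                            humidity: float,
                            pressure: float,
                            ambient_light: float,
                            timestamp: Optional[float] = None) -> bytes:
    """Serialize one round of sensor readings as a JSON telemetry message.

    Hypothetical schema: one flat JSON object per reading, which maps
    naturally onto the columns of a BigQuery table.
    """
    if timestamp is None:
        timestamp = time.time()  # seconds since the Unix epoch
    reading = {
        "device_id": device_id,
        "timestamp": timestamp,
        "temperature": temperature,      # assumed degrees Celsius
        "humidity": humidity,            # assumed relative humidity, percent
        "pressure": pressure,            # assumed kPa
        "ambient_light": ambient_light,  # assumed lux
    }
    # MQTT payloads are raw bytes, so encode the JSON text as UTF-8.
    return json.dumps(reading).encode("utf-8")


payload = build_telemetry_payload("enviro-board", 22.5, 41.0, 101.3, 350.0,
                                  timestamp=1600000000.0)
print(payload.decode("utf-8"))
```

Keeping the payload flat means each key can map directly onto a BigQuery column further down the pipeline.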

Figures: sensor readings; BigQuery table ingestion.

Whilst the readings are not particularly exciting, given the Pi sits in a well-lit office, we have at least created a working pipeline and can investigate the Cloud IoT Core data points, which would be conceptually similar for many industrial edge devices.

MQ Telemetry Transport (MQTT) is an ISO standard (ISO/IEC 20922) publish-subscribe, machine-to-machine network protocol. Originally developed at IBM, it is intended for IoT messaging where network and compute resources are often constrained. Despite the name's implied connection with IBM's MQ Series, MQTT is not actually a message queue. A message queue stores messages until they are consumed: each message persists in an ordered queue until it is picked up. In MQTT, a message published to a topic with no subscribers is simply discarded, unless it is flagged as retained.

In a message queue, each message is picked up and processed by one consumer. In MQTT, every active subscriber to a topic receives its own copy of the same message.
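The difference in delivery semantics can be shown with a small in-memory sketch. The `MessageQueue` and `Topic` classes below are hypothetical toys, not a real broker: they ignore retained messages, persistent sessions and QoS, and only illustrate one consumer per message versus one copy per subscriber.

```python
from collections import deque
from typing import Any, Callable, List, Optional


class MessageQueue:
    """Point-to-point queue: each message is consumed by exactly one consumer."""

    def __init__(self) -> None:
        self._messages: deque = deque()

    def send(self, message: Any) -> None:
        # The message waits in FIFO order until some consumer picks it up.
        self._messages.append(message)

    def receive(self) -> Optional[Any]:
        # Removing the message means no other consumer will ever see it.
        return self._messages.popleft() if self._messages else None


class Topic:
    """Publish-subscribe topic: every active subscriber gets its own copy."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[Any], None]] = []

    def subscribe(self, callback: Callable[[Any], None]) -> None:
        self._subscribers.append(callback)

    def publish(self, message: Any) -> int:
        # With no subscribers the message is simply not delivered anywhere.
        for callback in self._subscribers:
            callback(message)
        return len(self._subscribers)  # number of copies delivered


queue = MessageQueue()
queue.send("reading-1")
first, second = queue.receive(), queue.receive()  # "reading-1", then None

topic = Topic()
dropped = topic.publish("reading-1")  # 0 copies: nobody is listening yet
inbox_a: List[str] = []
inbox_b: List[str] = []
topic.subscribe(inbox_a.append)
topic.subscribe(inbox_b.append)
delivered = topic.publish("reading-2")  # 2 copies, one per subscriber
```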

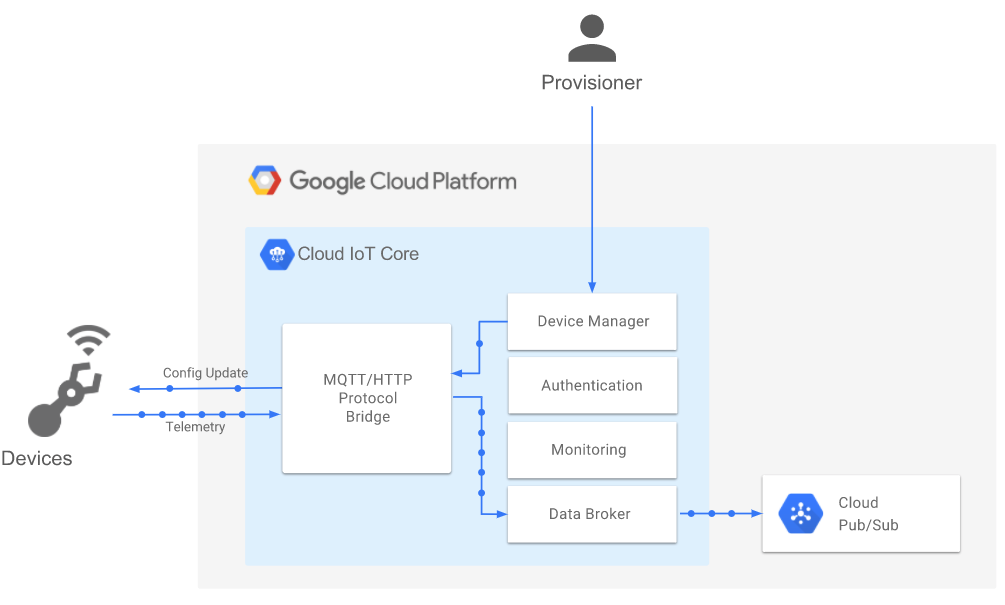

GCP Cloud IoT Core provides a managed MQTT bridge, which plays the broker role between devices and Google Cloud.

QoS

The MQTT specification describes three Quality of Service (QoS) levels:

- QoS 0: delivered at most once
- QoS 1: delivered at least once
- QoS 2: delivered exactly once

At the time of writing, Cloud IoT Core does not support QoS 2; subscribing to a topic using QoS 2 effectively downgrades QoS to 1.
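In MQTT, the broker reports the QoS level it actually grants for each subscription in its SUBACK response. Here is a minimal sketch of the downgrade rule described above, assuming a bridge whose maximum supported level is QoS 1; the function and constant names are hypothetical.

```python
from typing import Tuple

# Delivery guarantee for each MQTT QoS level.
GUARANTEES = {0: "at most once", 1: "at least once", 2: "exactly once"}

# Assumption: the bridge supports QoS 0 and 1 only, per the text above.
BRIDGE_MAX_QOS = 1


def granted_subscription_qos(requested_qos: int) -> Tuple[int, str]:
    """Return the QoS level a subscription actually receives, and its guarantee."""
    if requested_qos not in GUARANTEES:
        raise ValueError(f"invalid MQTT QoS level: {requested_qos}")
    granted = min(requested_qos, BRIDGE_MAX_QOS)
    return granted, GUARANTEES[granted]


for requested in (0, 1, 2):
    print(requested, "->", granted_subscription_qos(requested))
```

A client that needs exactly-once processing therefore has to handle duplicates itself, for example by de-duplicating on an identifier it includes in each payload.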

The Cloud IoT Core MQTT bridge maintains a small buffer of undelivered messages in order to retry them. If the buffer becomes full, a QoS 1 message may be dropped, in which case no PUBACK is sent to the client. The client is expected to resend the message.
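The client's resend responsibility can be sketched as a simple retry loop. `LossyBridge` is a hypothetical stand-in that drops messages according to a scripted pattern, mimicking a full retry buffer. A real MQTT client waits for the PUBACK with a timeout and sets the DUP flag on retransmissions; both are omitted here.

```python
from typing import Iterable, List, Tuple


class LossyBridge:
    """Toy bridge whose retry buffer is sometimes full (hypothetical)."""

    def __init__(self, drop_pattern: Iterable[bool]) -> None:
        # True in the pattern means "buffer full: drop without a PUBACK".
        self._drops = iter(drop_pattern)
        self.accepted: List[Tuple[int, bytes]] = []

    def publish(self, packet_id: int, payload: bytes) -> bool:
        """Return True if a PUBACK would be sent, False if the message was dropped."""
        if next(self._drops, False):
            return False
        self.accepted.append((packet_id, payload))
        return True


def publish_qos1(bridge: LossyBridge, packet_id: int, payload: bytes,
                 max_attempts: int = 5) -> int:
    """Resend the same packet until it is acknowledged; return the attempt count."""
    for attempt in range(1, max_attempts + 1):
        if bridge.publish(packet_id, payload):
            return attempt
    raise TimeoutError(f"no PUBACK for packet {packet_id} after {max_attempts} attempts")


bridge = LossyBridge([True, True, False])  # dropped twice, accepted on the third try
attempts = publish_qos1(bridge, packet_id=1, payload=b'{"temperature": 22.5}')
```

Because a client cannot tell a dropped message from a lost PUBACK, retrying is what makes QoS 1 "at least once": the same reading may occasionally arrive twice.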

Security

Of course, when deploying IoT devices, security must be front and center of the client conversation.

Rather than sitting in a secure datacenter, away from opportunistic data thieves, IoT devices are often deployed out in the field, close to the machines or equipment they monitor. In use, they may be subject to hacking or other attempts to falsify the data they collect. As configured from the factory, the Environmental Sensor Board for the Pi includes one Google key (private key, public key and certificate) to enable communication with Google Cloud IoT Core using asymmetric key authentication. Devices must verify Cloud IoT Core server certificates to ensure they are communicating with Cloud IoT Core rather than an impersonator.

A root CA certificate is used to establish the chain of trust when communicating with Cloud IoT Core over the Transport Layer Security (TLS) protocol.
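On the device side, verifying the server largely comes down to how the TLS context is built. Here is a minimal sketch using Python's standard `ssl` module, assuming you have downloaded a root CA bundle to a local path of your choosing (`ca_certs_path` is a hypothetical parameter). With no path, it falls back to the system's default trust store.

```python
import ssl
from typing import Optional


def make_device_tls_context(ca_certs_path: Optional[str] = None) -> ssl.SSLContext:
    """Build a client TLS context that rejects unverified or mismatched servers."""
    # PROTOCOL_TLS_CLIENT turns on certificate verification (CERT_REQUIRED)
    # and hostname checking by default.
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    if ca_certs_path:
        # Trust only the supplied root CA bundle.
        context.load_verify_locations(cafile=ca_certs_path)
    else:
        context.load_default_certs()
    return context


context = make_device_tls_context()
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

An MQTT client library such as paho-mqtt can be handed a context like this through its `tls_set_context()` method. The device then refuses to complete a handshake with any server whose certificate does not chain back to a trusted root. Asymmetric key authentication, where the device proves its own identity, happens on top of this channel.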

Once a device is configured with an MQTT client and connected to the MQTT bridge, it can publish a telemetry event by sending a PUBLISH message to the topic /devices/DEVICE_ID/events, optionally followed by a subfolder.
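The identifiers a device needs to reach the bridge can be assembled from its registry details. The sketch below builds the MQTT client ID, the telemetry topic and the JSON Web Token (JWT) claims that Cloud IoT Core expected. The class and function names are hypothetical, and `my-project` is a placeholder project ID; the region, registry and device values reuse this post's examples. Signing the JWT with the device's private key (ES256 or RS256) is left out, because it needs a crypto library or, on the Coral board, the secure cryptoprocessor.

```python
from dataclasses import dataclass
from typing import Dict

# Cloud IoT Core's MQTT bridge endpoint (TLS on port 8883).
MQTT_BRIDGE_HOST = "mqtt.googleapis.com"
MQTT_BRIDGE_PORT = 8883


@dataclass(frozen=True)
class DeviceIdentity:
    project_id: str
    region: str
    registry_id: str
    device_id: str

    @property
    def mqtt_client_id(self) -> str:
        # The bridge identifies a device by its full resource path.
        return (f"projects/{self.project_id}/locations/{self.region}"
                f"/registries/{self.registry_id}/devices/{self.device_id}")

    def telemetry_topic(self, subfolder: str = "") -> str:
        topic = f"/devices/{self.device_id}/events"
        # Anything after /events becomes the subFolder attribute in Pub/Sub.
        sub = subfolder.strip("/")
        return f"{topic}/{sub}" if sub else topic


def jwt_claims(project_id: str, issued_at: int, lifetime_s: int = 3600) -> Dict[str, object]:
    """Claims for the JWT sent as the MQTT password (signing not shown)."""
    if not 0 < lifetime_s <= 24 * 3600:
        raise ValueError("token lifetime must be positive and at most 24 hours")
    return {"iat": issued_at, "exp": issued_at + lifetime_s, "aud": project_id}


device = DeviceIdentity("my-project", "us-central1", "enviro-registry", "enviro-board")
print(device.mqtt_client_id)
print(device.telemetry_topic("weather"))
```

The bridge ignores the MQTT username, and the signed JWT goes in the password field, so the device must reconnect with a fresh token before `exp` passes.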

When the bridge forwards the event to Pub/Sub, it adds the following attributes to the message:

| Attribute | Description |
|---|---|
| deviceId | The user-defined string identifier for the device, for example, enviro-board. The device ID must be unique within the registry. |
| deviceNumId | The server-generated numeric ID of the device. When you create a device, Cloud IoT Core automatically generates the device numeric ID; it's globally unique and not editable. |
| deviceRegistryLocation | The Google Cloud Platform region of the device registry, for example, us-central1. |
| deviceRegistryId | The user-defined string identifier for the device registry, for example, enviro-registry. |
| projectId | The string ID of the cloud project that owns the registry and device. |
| subFolder | The subfolder can be used as an event category or classification. For MQTT clients, the subfolder is the subtopic after device_id/events. |
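Downstream, these attributes travel alongside the payload, so a subscriber can identify and route each message without parsing its body. Here is a minimal sketch, assuming attributes arrive as a plain string-to-string dictionary (as Pub/Sub attributes do). The `route_message` helper, the placeholder project ID and numeric ID, and the `"default"` category for messages without a subfolder are hypothetical choices.

```python
from typing import Dict, Tuple

# Attributes from the table above expected on every forwarded event
# (subFolder is treated as optional).
REQUIRED_ATTRIBUTES = (
    "deviceId",
    "deviceNumId",
    "deviceRegistryLocation",
    "deviceRegistryId",
    "projectId",
)


def route_message(attributes: Dict[str, str]) -> Tuple[str, str]:
    """Return a fully qualified device key and an event category for one message."""
    missing = [name for name in REQUIRED_ATTRIBUTES if name not in attributes]
    if missing:
        raise KeyError(f"missing Cloud IoT Core attributes: {missing}")
    # deviceId is only unique within a registry, so qualify it fully.
    device_key = "/".join(attributes[name] for name in (
        "projectId", "deviceRegistryLocation", "deviceRegistryId", "deviceId"))
    # Assumption: subFolder is absent when the device published without one.
    category = attributes.get("subFolder", "default")
    return device_key, category


example_attributes = {
    "deviceId": "enviro-board",
    "deviceNumId": "1234567890",  # hypothetical server-generated ID
    "deviceRegistryLocation": "us-central1",
    "deviceRegistryId": "enviro-registry",
    "projectId": "my-project",    # hypothetical project ID
    "subFolder": "weather",
}
print(route_message(example_attributes))
```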

The Cloud IoT Core device manager registers one or more devices with the service, enabling device monitoring and configuration. Device telemetry data is forwarded to a Cloud Pub/Sub topic, which can then be used to trigger Cloud Functions. Once the ingested IoT data is available in Cloud Pub/Sub, it can be used for onward processing, for example in an Enterprise Data Platform that offers granular, flexible access controls and governance to ensure the integrity and confidentiality of data across the entire organization.
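As an illustration of that onward processing, here is a minimal sketch of a Pub/Sub-triggered background Cloud Function in Python. Such functions receive an `event` dictionary whose `data` field holds the base64-encoded message body and whose `attributes` field holds the message attributes. The function name `handle_telemetry`, the output column names and the JSON payload format are assumptions. The function only returns a flattened row; a real pipeline might stream it into BigQuery, for example with the `google-cloud-bigquery` client's `insert_rows_json`.

```python
import base64
import json
from typing import Any, Dict, Optional


def handle_telemetry(event: Dict[str, Any], context: Optional[Any] = None) -> Dict[str, Any]:
    """Decode one Pub/Sub telemetry message into a flat, BigQuery-style row."""
    # Pub/Sub delivers the message body base64-encoded in event["data"].
    body = base64.b64decode(event["data"]).decode("utf-8")
    row: Dict[str, Any] = json.loads(body)  # assumes a flat JSON object payload
    attributes = event.get("attributes") or {}
    # Enrich the reading with identity added by Cloud IoT Core, not the device.
    row["device_id"] = attributes.get("deviceId", row.get("device_id"))
    row["registry_id"] = attributes.get("deviceRegistryId")
    row["region"] = attributes.get("deviceRegistryLocation")
    row["sub_folder"] = attributes.get("subFolder")
    return row


sample_body = json.dumps({"temperature": 22.5, "humidity": 41.0}).encode("utf-8")
sample_event = {
    "data": base64.b64encode(sample_body).decode("ascii"),
    "attributes": {
        "deviceId": "enviro-board",
        "deviceRegistryId": "enviro-registry",
        "deviceRegistryLocation": "us-central1",
        "subFolder": "weather",
    },
}
print(handle_telemetry(sample_event))
```

Taking the device identity from the attributes rather than the payload arguably makes it more trustworthy, since the attributes reflect the authenticated connection rather than whatever the device chose to write.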

Ingesting the IoT piece of pi(e) may still be time-consuming, per the Urban Dictionary definition. Yet with the right steps in place, pipelines can be operationalized to securely stream sensor data from multiple devices into GCP, managed end to end by a cloud-native Enterprise Data Platform by Pythian.

A future post will look at performing ML inference on that sensor data. There are plenty more pieces left: another slice of pi, anyone?