Using Docker to provide self-service database patching

I've been looking into how Vagrant and/or Docker can be used to improve the life of DBAs and maybe others, too. I did this mostly to practice using these tools - especially Docker - that are becoming more and more important in the modern IT landscape. I decided to set up a configuration that allowed for patching the database by starting a Docker container from an image that provides a patched version of Oracle software, and I was surprised by how simple it was.

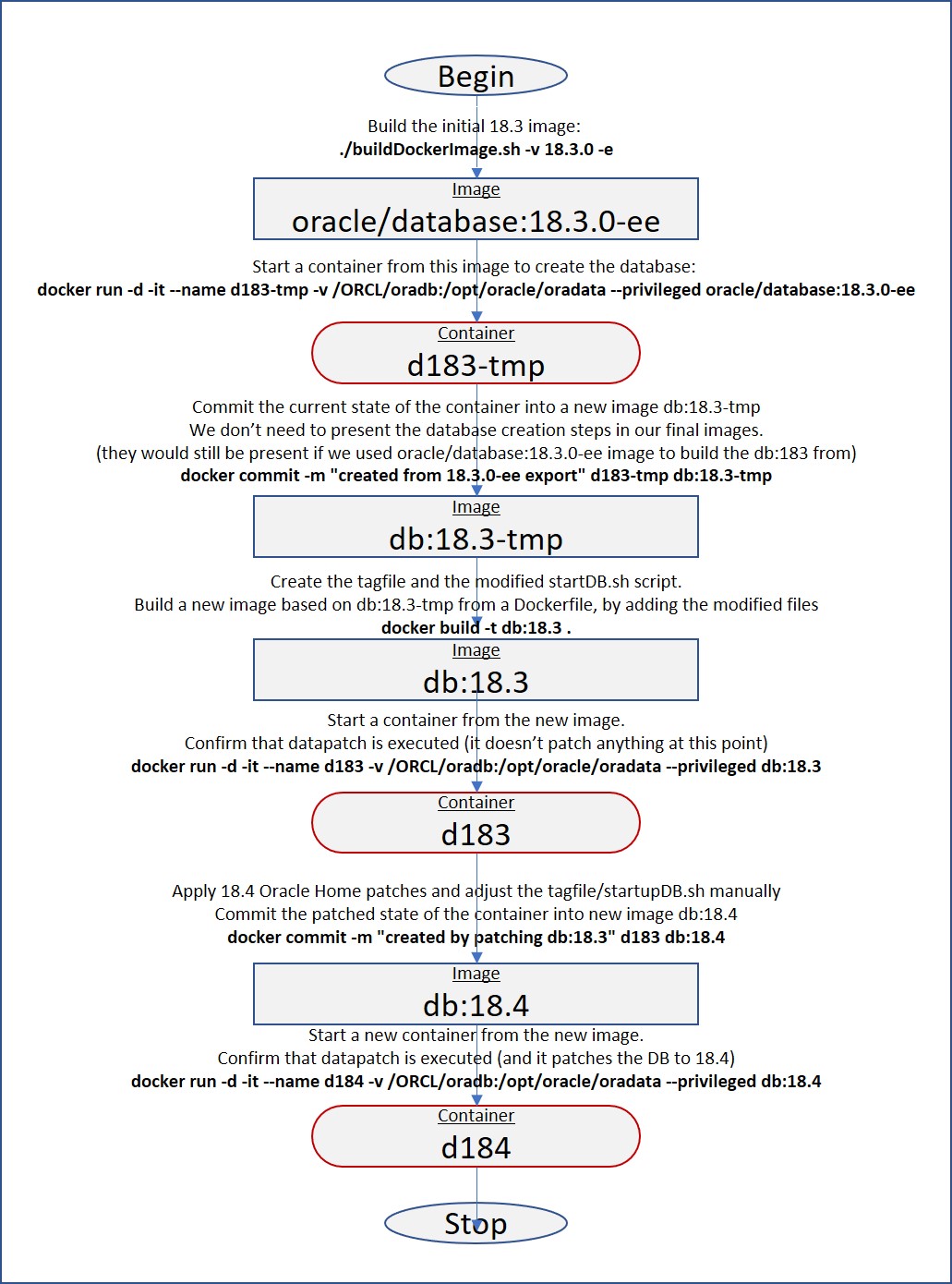

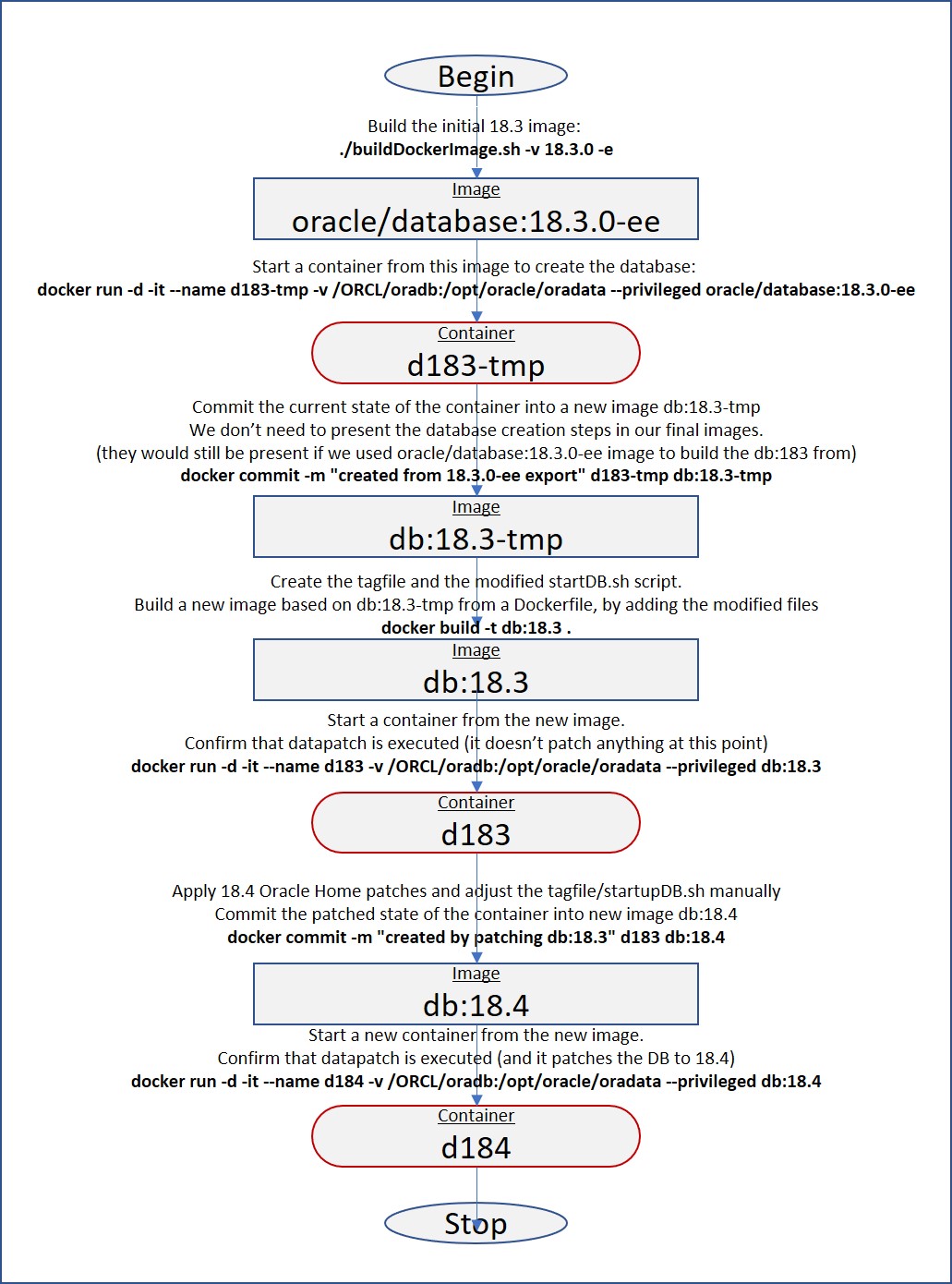

Overview of creating the db:18.3 and db:18.4 images.[/caption] After completing the whole process. You'll have two Docker containers - d183 and d184 - that you can start interchangeably to patch or de-patch the database between versions 18.3 and 18.4. This approach should also work with the majority of other patches, not only with the release update patches as displayed in this post. Additionally, you should be able to create more databases by following these steps:

Overview of creating the db:18.3 and db:18.4 images.[/caption] After completing the whole process. You'll have two Docker containers - d183 and d184 - that you can start interchangeably to patch or de-patch the database between versions 18.3 and 18.4. This approach should also work with the majority of other patches, not only with the release update patches as displayed in this post. Additionally, you should be able to create more databases by following these steps:

The vision

It's important to remember that the changes made inside a Docker container are ephemeral, so it's expected to lose them when the container is destroyed. Obviously, we wouldn't like to lose our database if the container was destroyed, thus, the data files have to be stored outside the container and should be attached to the container using a Docker volume. Additionally, the plan is to stop the old container and start a new one with a different version of DB software in it - here, too, the data files need to be located outside the container, otherwise, they would not be accessible to the other container we'd start. In this proof of concept implementation, I want to be able to detect if a "datapatch" command needs to be run in the database upon startup. This would need to happen only when the patch level of the RDBMS Oracle Home changes, or in our case, when the DB is started from a container created from a different version of the Docker image. Of course, we could run datapatch every time the database is started as it takes quite a bit of time to complete. It's also a little bit tricky to figure out if the datapatch needs to be run having access only to the data files of the database, thus I'll do it the following way:- Each version of the Docker image will also hold a "tagfile" that keeps a version number of the Docker image.

- At the startup of the container, the local tagfile is compared to the tagfile stored along with the data files on an external volume. If they are not the same, the datapatch needs to be executed. The local tagfile is copied to the external volume after completing the datapatch run.

Vagrant

I will be building this on my Windows 10 Home (Hyper-V not supported) laptop, so I can't run Docker on it natively. I'll use Vagrant to build a VirtualBox VM that will be my Docker "machine" to work around this limitation, and all further work will happen on that VM. I'm using MobaXterm as my terminal software, so the commands you'll see here will look Linux-like (they could even work if you copy 1-to-1 and execute on your Mac OS), although they are executed on Windows. After installing Vagrant and Virtualbox, the first thing we'll want to do is download the latest Oracle Linux Vagrant box that will be used to create the Docker "machine" VM:vagrant box add --name ol-latest https://yum.oracle.com/boxes/oraclelinux/latest/ol7-latest.box

mkdir -p ~/96_VAGRANT/OL-docker

cd ~/96_VAGRANT/OL-docker

The "~/96_VAGRANT/OL-docker" will serve as the working directory for this whole project. Please continue by uploading the following files to this directory. These will be required to create the initial Docker image, and patch the Oracle RDBMS software to create the second version of the container image.

- LINUX.X64_180000_db_home.zip - Obtain it from Oracle Software Downloads page. Pick the Oracle Database 18c (18.3) ZIP file for Linux x86-64.

- p28689117_180000_Linux-x86-64.zip - Combo of OJVM Component Release Update 18.4.0.0.181016 + Database Oct 2018 Release Update 18.4.0.0.181016. Obtain it from My Oracle Support.

- p6880880_180000_Linux-x86-64.zip - Latest version of 18c OPatch. Obtain it from My Oracle Support.

java -jar getMOSPatch.jar patch=28689117,6880880 platform=226P regexp=.*_180000_Linux-x86-64.* download=all Once you have the files in place, create the "Vagrantfile" by running this code block:

cat << EOF > Vagrantfile

disk_size = 100*1024

disk = "ORCL.vdi"

Vagrant.configure("2") do |config|

config.vm.box = "ol-latest"

config.vm.network "private_network", ip: "192.168.33.13"

config.vm.provider "virtualbox" do |vb|

vb.memory = "8196"

unless File.exist?(disk)

vb.customize ['createhd', '--filename', disk, '--size', disk_size]

end

vb.customize ['storageattach', :id, '--storagectl', 'SATA Controller', '--port', 2, '--device', 0, '--type', 'hdd', '--medium', disk]

end

config.vm.provision "shell", inline: <<-SHELL parted /dev/sdc mklabel msdos parted /dev/sdc mkpart primary btrfs 1 100000 mkfs.btrfs /dev/sdc1 echo "/dev/sdc1 /ORCL btrfs defaults 0 0" >> /etc/fstab

mkdir /ORCL

mount /ORCL

mkdir /ORCL/docker /ORCL/oradb

chown -R vagrant:vagrant /ORCL

chmod -R 777 /ORCL

yum install -y docker git unzip

sed -i.bck -e "s,selinux-enabled,selinux-enabled --graph /ORCL/docker,g" /etc/sysconfig/docker

systemctl start docker.service

systemctl enable docker.service

usermod -a -G docker vagrant

SHELL

end

EOF

The Vagrantfile defines the following settings:

- 192.168.33.13 - IP address will be assigned to the private network.

- ORCL.vdi - A 100G disk will be added as /ORCL to the VM. I'll use this disk to store all Docker stuff in it, as well as the data files of the database too. The space will be allocated dynamically, and based on my testing, it will consume 41G of space at the end of the process.

- The new disk will be formatted with BTRFS - I had some issues with EXT4 and EXT3 during the testing. Some of the files in the Docker container weirdly disappeared (I did not find the reason for that yet, but I found BTRFS was working okay. It might have something to do with the Layered FS that Docker depends on, but I'm not sure).

- Docker, git and unzip will be installed. As well, Docker is reconfigured to move its files to the new disk. Then the Docker service is also started.

vagrant up

ssh -i ~/96_VAGRANT/OL-docker/.vagrant/machines/default/virtualbox/private_key vagrant@192.168.33.13

Building the first Docker image

There's nothing really specific about the way I build the initial Docker image for the 18.3 database. I'm basically running the "buildDockerImage.sh" script as Gerald Venzl suggests in the documentation. The only considerable difference is modification of the installDBBinaries.sh script to remove lines that contain "rm -rf $ORACLE_HOME/..". Apparently some directories from Oracle Home are being removed to reduce the size of the container, but it creates a problem, as Opatch fails to apply patches. I'm also copying / extracting the required patches to the /ORCL disk, as this location will be made available to the running containers.cd

git clone https://github.com/oracle/docker-images.git

mkdir /ORCL/oradb/patch/

cp /vagrant/p6880880*.zip /ORCL/oradb/patch/

unzip -q -d /ORCL/oradb/patch /vagrant/p28689117_180000_Linux-x86-64.zip

sed -i.back -e '/rm -rf $ORACLE_HOME/d' ~/docker-images/OracleDatabase/SingleInstance/dockerfiles/18.3.0/installDBBinaries.sh

cd ~/docker-images/OracleDatabase/SingleInstance/dockerfiles

cp /vagrant/LINUX.X64_180000_db_home.zip 18.3.0/LINUX.X64_180000_db_home.zip

./buildDockerImage.sh -v 18.3.0 -e

rm 18.3.0/LINUX.X64_180000_db_home.zip

The completion of the build script will leave us with a prepared "oracle/database:18.3.0-ee" Docker image. There is no database yet, it will be created the first time a Docker container is started from this image. Let's start it up, by mapping /ORCL/oradb as /opt/oracle/oradata to the container, thus, all datafiles stored in /opt/oracle/oradata will actually be stored outside of the container in /ORCL/oradb direcotry of the VM.

docker run -d -it --name d183-tmp -v /ORCL/oradb:/opt/oracle/oradata --privileged oracle/database:18.3.0-ee

docker logs d183-tmp -f

# Ctrl-C when done

docker stop d183-tmp

docker commit -m "created from 18.3.0-ee export" d183-tmp db:18.3-tmp

The first command triggers the startup of the container named d183-tmp, and, as the database is not yet created, it will also take some time to create the DB. You'll be able to follow it happening in the logs by running the second command. Once the DB is ready, the Docker container is stopped, and a new image named "db:18.3-tmp" from the current state of the container is created.

Adding the "datapatch" run to the 18.3 image

To facilitate database patching or de-patching, the "datapatch" command needs to be executed. I'll build a new container image based on "db:18.3-tmp" for that. Remember, all these activities still happen inside the Vagrant VM we created earlier. The "docker build" command will be used to create the new container. Let's prepare the required files in a separate directory - db-18.3:- Create the directory, and copy the startDB.sh

mkdir ~/db-18.3 && cd ~/db-18.3 cp ~/docker-images/OracleDatabase/SingleInstance/dockerfiles/18.3.0/startDB.sh .

- Create the Dockerfile. The Dockerfile contains build instructions for the new image, in this case they are very simple - - use image "db:18.3-tmp" as the source and copy files "tagfile" and "startDB.sh" file to ${ORACLE_BASE} directory (the variable is defined in one of the earlier layers of the image, and its value is preserved). These are the contents of the "Dockerfile":

echo " FROM db:18.3-tmp as base COPY tagfile \${ORACLE_BASE}/ COPY startDB.sh \${ORACLE_BASE}/" > Dockerfile - Create the "tagfile" with the following contents:

echo "db:18.3" > tagfile

- Adjust the "startupDB.sh" to add the following lines right above the "# Start Listener" line. This additional logic ensures that datapatch is executed when the container is started and if the external tagfile does not match the tagfile of the container. Note, the datapatch is executed twice for 18.3 container to workaround a known issue that causes an incomplete patch rollback if it's executed just once. The code block below adjusts the file as per this description.

LISTENER_LINE=$(grep -n "# Start Listener" ~/docker-images/OracleDatabase/SingleInstance/dockerfiles/18.3.0/startDB.sh | cut -d: -f1) head -$(expr ${LISTENER_LINE} - 1) ~/docker-images/OracleDatabase/SingleInstance/dockerfiles/18.3.0/startDB.sh > startDB.sh echo " touch \$ORACLE_BASE/oradata/tagfile cmp --silent \$ORACLE_BASE/oradata/tagfile \$ORACLE_BASE/tagfile || { sqlplus / as sysdba << EOF STARTUP; ALTER PLUGGABLE DATABASE ALL OPEN; exit; EOF echo Running datapatch \$ORACLE_HOME/OPatch/datapatch -verbose \$ORACLE_HOME/OPatch/datapatch -verbose # remove for non-18.3 # cp \$ORACLE_BASE/tagfile \$ORACLE_BASE/oradata/tagfile sqlplus / as sysdba << EOF SHUTDOWN IMMEDIATE; exit; EOF } " >> startDB.sh tail -n+${LISTENER_LINE} ~/docker-images/OracleDatabase/SingleInstance/dockerfiles/18.3.0/startDB.sh >> startDB.sh chmod u+x startDB.sh

docker build -t db:18.3 .

docker rm d183-tmp

docker run -d -it --name d183 -v /ORCL/oradb:/opt/oracle/oradata --privileged db:18.3

docker logs d183 -f

# Ctrl-C when done

Creating the 18.4 image from the 18.3 container

In order to provide a Docker image for the 18.4 database, we patch the Oracle Home in the current container "d183". We'll modify the tagfile and startDB.sh, and we'll commit the container into a new image db:18.4. The first thing to do is the database software patching. We already have the patch files available on /ORCL, but they're still owned by root. We need to connect to the Docker container as root (-u 0), and change the ownership of the files:docker exec -u 0 -it d183 bash

chown -R oracle:oinstall /opt/oracle/oradata/patch

Additionally, the tagfile and the startupDB.sh need to be modified. We're changing the version tag in the tagfile and removing the second datapatch run from the startDB.sh file:

echo "db:18.4" > /opt/oracle/tagfile

sed -i.back -e '/# remove for non-18.3 #/d' /opt/oracle/startDB.sh

exit

Next, we connect to the same container as the default user (Oracle) and will perform the normal patching activities of the database software - replace the OPatch with the new one, stop the DB and the listener and apply the 18.4.0.0.181016 DB and OJVM release update patches. No need to start the DB at this point, as that and the datapatch run will be done during the startup of the container:

docker exec -it d183 bash

cd $ORACLE_HOME

rm -rf OPatch

unzip -q /opt/oracle/oradata/patch/p6880880_180000_Linux-x86-64.zip

export ORACLE_SID=ORCLCDB

ORAENV_ASK=NO

. oraenv

sqlplus / as sysdba << EOF

shut immediate;

exit;

EOF

lsnrctl stop

cd /opt/oracle/oradata/patch/28689117/28655784/

opatch apply -silent

cd /opt/oracle/oradata/patch/28689117/28502229/

opatch apply -silent

exit

Now, the container "d183" currently contains the exact contents we want to have in our 18.4 version of the Docker image - db:18.4. Luckily, it's easy to create an image from a running container. Let's do that, and let's remove the d183 container (because it contains 18.4 software, thus the name is confusing). We'll also remove the old 18.3-tmp image because it is no longer needed.

docker commit -m "created by patching db:18.3" d183 db:18.4

docker stop d183

docker rm d183

docker image rm db:18.3-tmp

docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

db 18.4 320eeb4c0b96 6 seconds ago 14.3GB

db 18.3 f0b291b882cb 2 hours ago 10.4GB

oracle/database 18.3.0-ee b761c85e8061 3 hours ago 10.3GB

oraclelinux 7-slim b8b00d5b0a75 2 weeks ago 117MB

Does it really work?

It's easy to test it, we just need to create the containers for each version of the database image - "db:18.3" and "db:18.4", then we can start and stop them interchangeably to observe how the datapatch upgrades or downgrades the database. You can keep both containers around, but only one of them is allowed to be started at the same time.docker run -d -it --name d184 -v /ORCL/oradb:/opt/oracle/oradata --privileged db:18.4

docker logs d184 -f

# Datapatch is expected

# Ctrl-C when done

docker stop d184 && docker logs d184

docker run -d -it --name d183 -v /ORCL/oradb:/opt/oracle/oradata --privileged db:18.3

docker logs d183 -f

# Datapatch is expected to remove 18.4 patches

# Ctrl-C when done

docker stop d183 && docker logs d183

docker start d184 && docker logs d184 -f

# Datapatch is expected to apply the 18.4 patches again

# Ctrl-C when done

docker stop d184 && docker logs d184

docker start d184 && docker logs d184 -f

# Datapatch is NOT expected as we started from 18.4 container the last time too

# Ctrl-C when done

docker stop d184

The full outputs of all commands executed in this blog

are available here.

Summary

The blog post turned out to be longer than I expected, and it might make you think the process is more complicated than expected. Please, take a look at this flowchart describing the main tasks that were performed - there's really not too much going on! [caption id="attachment_105368" align="alignnone" width="1031"] Overview of creating the db:18.3 and db:18.4 images.[/caption] After completing the whole process. You'll have two Docker containers - d183 and d184 - that you can start interchangeably to patch or de-patch the database between versions 18.3 and 18.4. This approach should also work with the majority of other patches, not only with the release update patches as displayed in this post. Additionally, you should be able to create more databases by following these steps:

Overview of creating the db:18.3 and db:18.4 images.[/caption] After completing the whole process. You'll have two Docker containers - d183 and d184 - that you can start interchangeably to patch or de-patch the database between versions 18.3 and 18.4. This approach should also work with the majority of other patches, not only with the release update patches as displayed in this post. Additionally, you should be able to create more databases by following these steps:

- Start a new container X from "Oracle/database:18.3.0-ee", map a different location for datafiles.

- Remove the new container X - it will still leave the data files in place.

- Create containers from images db:18.3 and db:18.4 to allow the automated patching that we implemented here.